TIAGO PRATIS MEDICE

CERTIFIED CLOUD ENGINEER

CORE SKILLS

Programming Languages: Java, Python, C#, JavaScript

Java Frameworks & Libraries: Spring (Integration, Security, Boot, Batch, AOP, JPA, JdbcTemplate, HATEOAS, GraphQL), JSP, JSF, Hibernate, EJB, jQuery

Cloud Platforms & Services: Google Cloud Platform (Cloud Endpoints, Cloud Functions, Google Kubernetes Engine, Cloud Run, Cloud Build, Pub/Sub, Cloud SQL for PostgreSQL, BigQuery, Cloud Storage), Firebase, OpenShift, Kibana, Elasticsearch, Splunk

CI/CD Tools: Jenkins, TeamCity, Git, Helm, Cloud Build

Build and Automation: Gradle, Maven, Docker

Web Services and APIs: REST, Swagger, WSDL, WADL, J2EE Web Services

Databases: BigQuery, Oracle, DB2, MongoDB, MySQL, PostgreSQL

Testing and Quality Assurance: JUnit, JMeter, Mockito

Communication Protocols & Middleware: Kafka, WebSphere Application Server, WebLogic, XML

Enterprise Tools & Platforms: SharePoint, ODM, BPM (basic)

Methodologies: Agile, Scrum, Extreme Programming, Feature-Driven Development, Rapid Application Development, Systems Development Life Cycle

About Me

Personable professional whose strengths include cultural sensitivity and an ability to build rapport with a diverse workforce in multicultural settings, and who advances a positive company image through public presentations at universities and to clients.

Knowledge-hungry learner, eager to meet challenges; a confident, hard-working employee committed to achieving excellence.

Highly motivated self-starter who takes initiative with minimal supervision.

Energetic performer consistently cited for unbridled passion for work, sunny disposition, and upbeat, positive attitude.

Productive worker with solid work ethic who exerts optimal effort in successfully completing tasks.

Highly adaptable, mobile, positive, resilient, patient risk-taker who is open to new ideas.

Resourceful team player who excels at building trusting relationships with customers and colleagues.

Exemplary planning and organizational skills, along with a high degree of detail orientation.

Goal-driven leader who maintains a productive climate and confidently motivates, mobilizes, and coaches employees to meet high performance standards.

Proven relationship-builder with unsurpassed interpersonal skills.

Highly analytical thinker with a demonstrated talent for identifying, scrutinizing, improving, and streamlining complex work processes.

Exceptional listener and communicator who effectively conveys information verbally and in writing.

Prime Skills

- Google Cloud Platform

- Java

- Python

- RDBMS

- NoSQL

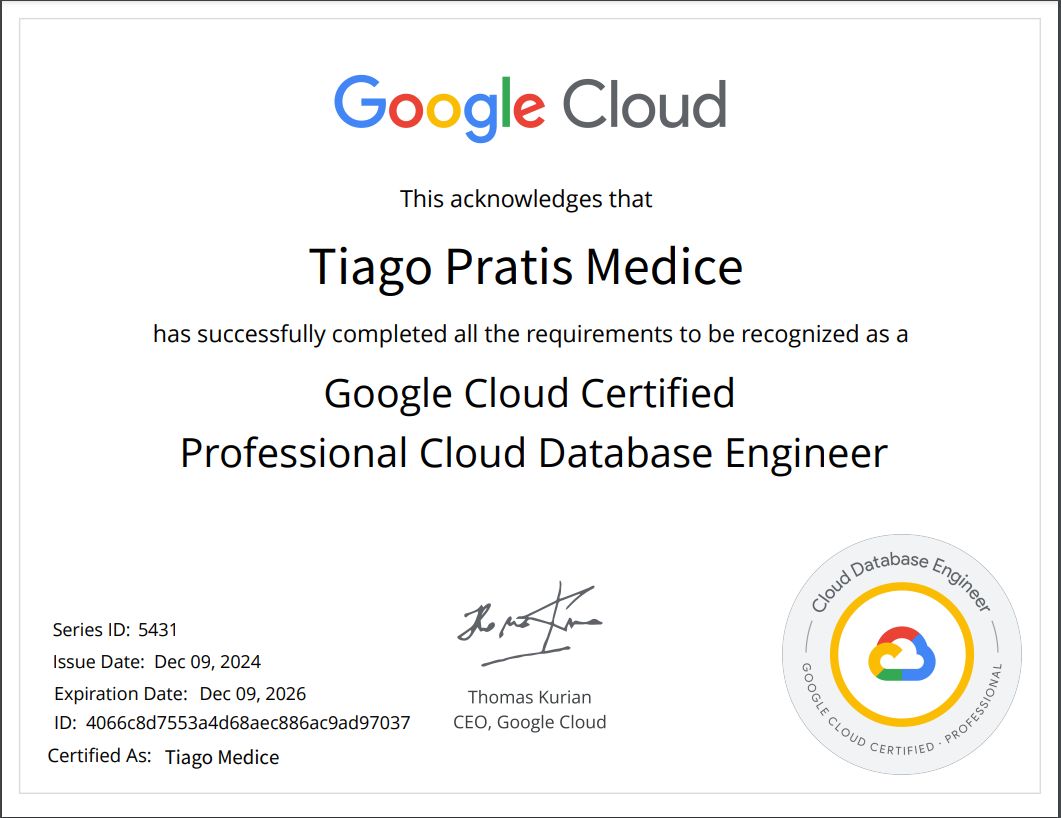

Certifications

- AWS Cloud Practitioner · Issued Oct 2024 · Expires Nov 2027

- Professional Database Engineer · Issued Dec 2024 · Expires Dec 2026

- Professional Cloud Architect · Issued Feb 2024 · Expires Dec 2026

- Professional Cloud Developer · Issued Dec 2023 · Expires Dec 2025

- Associate Cloud Engineer · Issued Dec 2023 · Expires Dec 2026

- Associate Data Practitioner · Issued Mar 2025 · Expires Mar 2028

- Cloud Digital Leader · Issued Jan 2024 · Expires Jan 2027

MICROSERVICES: QUARKUS VS SPRING BOOT

In the era of containers (the "Docker Age"), Java is still on top, but which is better: Spring Boot or Quarkus?

In the era of containers (the "Docker Age"), Java is still alive, whether it is struggling or not. Java has always been (in)famous for its performance, mostly because of the abstraction layers between the code and the real machine. The cost of being multi-platform ("Write once, run anywhere", remember?) is a JVM in between (the JVM is a software machine that simulates what a real machine does).

Nowadays, with microservice architectures, it may no longer make sense, or bring any advantage, to build something multi-platform (bytecode running on a JVM) for something that will always run in the same place and on the same platform (a Docker container, i.e. a Linux environment). Portability is less relevant than ever, so that extra level of abstraction matters much less.

Having said that, let's perform a simple, raw comparison between two alternatives for building microservices in Java: the well-known Spring Boot and the not-so-well-known (yet) Quarkus.

Opponents

Who Is Quarkus?

An open-source set of technologies adapted to GraalVM and HotSpot for writing Java applications. It promises super-fast startup time and a lower memory footprint, which makes it ideal for containers and serverless workloads. It uses Eclipse MicroProfile (JAX-RS, CDI, JSON-P), a subset of Java EE, to build microservices.

GraalVM is a universal, polyglot virtual machine (JavaScript, Python, Ruby, R, Java, Scala, Kotlin). GraalVM (specifically Substrate VM) makes ahead-of-time (AOT) compilation possible: it converts bytecode into native machine code and produces a binary that can be executed natively.

Bear in mind that not every feature is available in native execution; AOT compilation has its limitations. Pay attention to this sentence (quoting the GraalVM team):

We run an aggressive static analysis that requires a closed-world assumption, which means that all classes and all bytecodes that are reachable at runtime must be known at build time.

So, for instance, reflection and the Java Native Interface (JNI) won't work out of the box; they require some extra configuration. You can find a list of restrictions in the Native Image Java Limitations document.
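To make the closed-world assumption concrete, here is a minimal plain-Java sketch of code that a build-time analysis cannot follow. The class and method names are hypothetical. The class name is only assembled at runtime, so a native image would typically not include that class unless it is registered for reflection. Registration can be done through GraalVM's reflection configuration or, in Quarkus, with the @RegisterForReflection annotation. On a regular JVM the code simply works.

```java
class DynamicLoading {
    // The class name exists only as data at runtime, so a build-time
    // (closed-world) analysis cannot tell which class will be loaded here.
    static Object instantiate(String packageName, String simpleName) throws ReflectiveOperationException {
        Class<?> type = Class.forName(packageName + "." + simpleName);
        return type.getDeclaredConstructor().newInstance();
    }

    public static void main(String[] args) throws ReflectiveOperationException {
        Object list = instantiate("java.util", "ArrayList");
        System.out.println(list.getClass().getName()); // prints java.util.ArrayList on a regular JVM
    }
}
```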

Who Is Spring Boot?

Really? Well, just to say something (feel free to skip it), in one sentence: built on top of the Spring Framework, Spring Boot is an open-source framework that offers a much simpler way to build, configure, and run Java web-based applications, which makes it a good candidate for microservices.

Battle Preparations: Creating the Docker Images

Quarkus Image

Let's create the Quarkus application and later wrap it in a Docker image. Basically, we will do the same thing the Quarkus Getting Started tutorial does.

Creating the project with the Quarkus Maven plugin:

```shell
mvn io.quarkus:quarkus-maven-plugin:1.0.0.CR2:create \
    -DprojectGroupId=ujr.combat.quarkus \
    -DprojectArtifactId=quarkus-echo \
    -DclassName="ujr.combat.quarkus.EchoResource" \
    -Dpath="/echo"
```

In the generated code, we have to change just one thing: add the dependency below, because we want to produce JSON content.

```xml
<dependency>
    <groupId>io.quarkus</groupId>
    <artifactId>quarkus-resteasy-jsonb</artifactId>
</dependency>
```

Quarkus implements the JAX-RS specification through the RESTEasy project.

Spring Boot Image

At this point, probably everyone knows how to produce an ordinary Spring Boot Docker image, so let's skip the details. Just one important observation: the code is almost exactly the same. The only difference is that it uses Spring Framework annotations instead of JAX-RS ones. You can check every detail in the provided source code (link down below).

```shell
mvn install dockerfile:build

# Testing it...
docker run --name springboot-echo --rm -p 8082:8082 ujr/springboot-echo
```

The Battle

Let's launch both containers, get them up and running a couple of times, and compare the Startup Time and the Memory Footprint.

In this process, each container was created and destroyed 10 times, and its startup time and memory footprint were recorded. The numbers shown below are the averages of all those runs.

Startup Time

This aspect can play an important role in scalability and in serverless architectures.

In a serverless architecture, an ephemeral container is normally triggered by an event to perform a task/function. In cloud environments, pricing is usually based on the number and duration of executions rather than on previously purchased compute capacity. The cold start therefore matters for this type of solution, because the container normally lives only as long as it takes to execute its task.

For scalability, if you need to scale out suddenly, the startup time defines how long it takes until your new containers are completely ready (up and running) to handle the load.

The more sudden the scale-out (needed now, and fast), the more damaging long cold starts become.

You may have noticed that there is one more option in the Startup Time graph. It is exactly the same Quarkus application, but packaged in a JVM Docker image (using Dockerfile.jvm). As we can see, even the Quarkus application running on the JVM starts faster than Spring Boot.

Needless to say, the winner is the Quarkus native application, which is by far the fastest to start.

Microservices

Microservices is an architectural pattern in which an application is built from loosely coupled, independently deployable services. Each service is responsible for a specific business function and communicates with other services over lightweight protocols.

Monolithic Architecture

In contrast, a Monolithic architecture is built as a single, tightly-coupled unit where functionalities are deployed together. A change in any module requires redeploying the entire application.

Challenges in Microservices Architecture

- Data Consistency: Maintaining consistency across distributed services can be challenging.

- Inter-Service Communication: Choosing the right communication protocols is critical.

- Distributed System Complexity: Managing multiple services, deployments, and versioning can increase system complexity.

- Monitoring and Logging: Comprehensive monitoring and logging are needed to track issues across services.

- Security: Managing authentication and authorization across multiple services.

How Spring Boot Simplifies Microservices

- Embedded servers like Tomcat or Jetty enable services to run independently.

- Autoconfiguration and starter dependencies reduce boilerplate code and simplify configuration.

- Spring Boot Actuator helps with monitoring microservices' health, metrics, and application status.

Inter-Service Communication in Microservices

Inter-service communication can be handled through:

- Synchronous methods: like REST APIs (using HTTP) or gRPC.

- Asynchronous methods: using messaging queues such as RabbitMQ and Kafka, improving decoupling, reliability, and scalability.
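As an in-process analogy only, the sketch below decouples a producer from a consumer with a java.util.concurrent.BlockingQueue. A real system would use a broker such as RabbitMQ or Kafka. The producer moves on as soon as a message is enqueued, and the consumer processes messages on its own thread at its own pace. The class name, the message format and the "poison pill" stop signal are illustrative assumptions.

```java
import java.util.ArrayList;
import java.util.List;
import java.util.concurrent.ArrayBlockingQueue;
import java.util.concurrent.BlockingQueue;

class QueueDecouplingDemo {
    private static final String POISON_PILL = "__done__"; // tells the consumer to stop

    static List<String> run(List<String> orders) throws InterruptedException {
        BlockingQueue<String> queue = new ArrayBlockingQueue<>(10); // bounded buffer between "services"
        List<String> processed = new ArrayList<>();

        // Consumer: drains the queue independently of the producer.
        Thread consumer = new Thread(() -> {
            try {
                while (true) {
                    String message = queue.take(); // blocks until a message is available
                    if (POISON_PILL.equals(message)) {
                        break;
                    }
                    processed.add("processed:" + message);
                }
            } catch (InterruptedException e) {
                Thread.currentThread().interrupt();
            }
        });
        consumer.start();

        // Producer: publishes and continues without waiting for processing.
        for (String order : orders) {
            queue.put(order);
        }
        queue.put(POISON_PILL);

        consumer.join(); // join() also makes the consumer's writes to 'processed' visible here
        return processed;
    }

    public static void main(String[] args) throws InterruptedException {
        System.out.println(run(List.of("order-1", "order-2", "order-3")));
    }
}
```

With a single consumer, messages are processed in FIFO order. Adding consumers would raise throughput but give up that global ordering, which is the same trade-off Kafka handles with partitions.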

Types of Service Communication

- Internal Service: Service-to-service communication using DNS and ClusterIP, simple but limited in features.

- Service Mesh: Manages communication between microservices, adding advanced features like traffic management, security, and observability using sidecar proxies and a control plane.

Spring Boot

Spring Boot is a framework built on top of the Spring Framework that largely eliminates complex configuration files.

What is Spring Boot, and How Does It Differ from the Traditional Spring Framework?

Spring Boot is an extension of Spring that simplifies dependency management and configuration. It provides auto-configuration, embedded servers, and starter dependencies, reducing boilerplate code.

Auto-Configuration in Spring Boot

Auto-configuration automatically configures a Spring application based on JAR dependencies in the classpath. For example, with spring-boot-starter-web, Spring Boot automatically configures a web application environment.
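Spring Boot implements this with conditions such as @ConditionalOnClass. The sketch below is not Spring code; it only mimics the idea in plain Java. A hypothetical "auto-configuration" checks whether a marker class is on the classpath and enables a feature only when it is. The class name com.example.missing.WebServer is invented to stand for a library that is absent.

```java
import java.util.ArrayList;
import java.util.List;

class ClasspathConditionDemo {
    // True if the named class can be loaded from the current classpath (without initializing it).
    static boolean isPresent(String className) {
        try {
            Class.forName(className, false, ClasspathConditionDemo.class.getClassLoader());
            return true;
        } catch (ClassNotFoundException e) {
            return false;
        }
    }

    // Hypothetical auto-configuration: each feature is switched on only if its marker class exists.
    static List<String> autoConfigure() {
        List<String> enabled = new ArrayList<>();
        if (isPresent("java.net.http.HttpClient")) {      // part of the JDK since Java 11
            enabled.add("http-client");
        }
        if (isPresent("com.example.missing.WebServer")) {  // hypothetical class, not on the classpath
            enabled.add("web-server");
        }
        return enabled;
    }

    public static void main(String[] args) {
        System.out.println("Enabled features: " + autoConfigure());
    }
}
```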

Spring Boot Starters

Starters are predefined dependency bundles that provide necessary libraries to set up specific features or modules. For instance, spring-boot-starter-data-jpa includes dependencies for using JPA with Spring.

Handling Externalized Configuration

Spring Boot supports externalized configuration through .properties files, .yml files, environment variables, or command-line arguments, making it easy to change settings for different environments.
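The precedence between these sources can be sketched in plain Java. Spring Boot's real ordering has many more levels; this simplified sketch keeps only three of them, highest first: command-line arguments, then environment variables, then the properties file. It also applies a reduced version of Spring Boot's relaxed binding for environment variables, where server.port becomes SERVER_PORT. The ConfigResolver class is hypothetical.

```java
import java.util.HashMap;
import java.util.Locale;
import java.util.Map;
import java.util.Properties;

class ConfigResolver {
    private final Map<String, String> commandLine;
    private final Map<String, String> environment;
    private final Properties fileProperties;

    ConfigResolver(String[] args, Map<String, String> environment, Properties fileProperties) {
        this.commandLine = parseArgs(args);
        this.environment = environment;
        this.fileProperties = fileProperties;
    }

    // "--server.port=9090" becomes {server.port=9090}; other arguments are ignored.
    static Map<String, String> parseArgs(String[] args) {
        Map<String, String> parsed = new HashMap<>();
        for (String arg : args) {
            int eq = arg.indexOf('=');
            if (arg.startsWith("--") && eq > 2) {
                parsed.put(arg.substring(2, eq), arg.substring(eq + 1));
            }
        }
        return parsed;
    }

    // Simplified relaxed binding: dots to underscores, dashes removed, upper case.
    static String toEnvName(String key) {
        return key.replace('.', '_').replace("-", "").toUpperCase(Locale.ROOT);
    }

    // Highest precedence first: command line, environment, properties file, then the default.
    String resolve(String key, String defaultValue) {
        String fromArgs = commandLine.get(key);
        if (fromArgs != null) {
            return fromArgs;
        }
        String fromEnv = environment.get(toEnvName(key));
        if (fromEnv != null) {
            return fromEnv;
        }
        return fileProperties.getProperty(key, defaultValue);
    }

    public static void main(String[] args) {
        Properties file = new Properties();
        file.setProperty("server.port", "8080"); // as if read from application.properties
        ConfigResolver resolver = new ConfigResolver(args, System.getenv(), file);
        System.out.println("server.port = " + resolver.resolve("server.port", "8080"));
    }
}
```

Running this with --server.port=9090, or with SERVER_PORT set in the environment, overrides the file value. That is the behavior that lets the same artifact run unchanged in different environments.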

Application Properties

The application.properties or application.yml file is used to configure application-specific properties such as database connections, server port, and custom configurations.

Custom Configurations

Custom configurations can be defined with @Configuration and customized further by injecting property values with @Value or @ConfigurationProperties.

Spring Boot Actuator

Spring Boot Actuator provides operational features such as monitoring and health checking for production-ready applications. Some key endpoints include:

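- /actuator/health: Checks the application's health.

- /actuator/metrics: Provides metrics about application performance.

- /actuator/info: Displays application information.

Over HTTP, recent Spring Boot versions expose only /actuator/health by default. Other endpoints are exposed with the management.endpoints.web.exposure.include property.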

Creating a RESTful Web Service

With the spring-boot-starter-web dependency, RESTful controllers can be created using the @RestController annotation, routing requests with @RequestMapping or @GetMapping, and returning JSON objects automatically.

Embedded Server in Spring Boot

Spring Boot includes embedded servers such as Tomcat or Jetty, allowing applications to be packaged as executable JAR files and run without an external server. The embedded server can be configured with properties such as server.port. The server type can be switched in pom.xml by excluding spring-boot-starter-tomcat and adding another starter, such as spring-boot-starter-jetty.

Securing a Spring Boot Application

Spring Security, included via spring-boot-starter-security, provides default security configurations. Customizations can be made using @EnableWebSecurity and configuring custom settings for authentication and authorization.

Managing Dependencies with Maven or Gradle

Spring Boot provides version management for common dependencies through its parent POM or spring-boot-dependencies, ensuring compatible library versions and reducing dependency conflicts.

The @SpringBootApplication Annotation

The @SpringBootApplication annotation combines:

- @Configuration: Marks the class as a source of bean definitions.

- @EnableAutoConfiguration: Enables auto-configuration.

- @ComponentScan: Enables scanning for components in the package (and its sub-packages).

Spring Boot Profiles

Profiles allow different configurations for different environments (e.g., development, testing, production) and can be activated with spring.profiles.active in the application properties or via command-line arguments.

Database Configuration

Database settings are configured in application.properties using properties like spring.datasource.url, spring.datasource.username, and spring.datasource.password. Additional JPA settings can be added for Hibernate configuration.

Exception Handling

Spring Boot provides default exception handling and allows for global exception management using @ControllerAdvice and @ExceptionHandler annotations.

Running SQL Scripts on Startup

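Spring Boot can run SQL scripts on startup. Place a data.sql file in src/main/resources to insert data, and provide a schema.sql file in the same location to create the schema (DDL). Since Spring Boot 2.5, these scripts run by default only for embedded databases such as H2. Set spring.sql.init.mode=always to run them against other databases. When Hibernate also generates the schema, spring.jpa.defer-datasource-initialization=true makes data.sql run after Hibernate has created the tables.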

Spring Data JPA

Spring Data JPA is part of the Spring Data project, offering integration with the Java Persistence API (JPA) to simplify common data access tasks like CRUD operations, pagination, and query execution.

Benefits of Using Spring Data JPA over Native JPA

- Reduces boilerplate code by auto-generating queries for CRUD operations.

- Supports dynamic query methods based on method names.

- Provides built-in pagination and sorting support.

- Supports JPQL and native SQL queries using the @Query annotation.

- Integrates easily with Spring Boot and other Spring components.

JpaRepository vs. CrudRepository

JpaRepository extends CrudRepository, adding additional functionality like pagination, sorting, flushing persistence context, and batch processing.

Key Annotations

- @Entity: Marks a class as a JPA entity, mapping it to a database table.

- @Id: Specifies the primary key field of the entity.

- @GeneratedValue: Defines the strategy for generating primary key values, such as AUTO, IDENTITY, SEQUENCE, and TABLE.

Creating Custom Queries with JPQL

Use the @Query annotation to define custom JPQL queries:

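```java
// Declared in a Spring Data repository interface (e.g. a hypothetical EmployeeRepository)
@Query("SELECT e FROM Employee e WHERE e.name = :name")
List<Employee> findEmployeesByName(@Param("name") String name);
```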

@Transactional Annotation

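The @Transactional annotation ensures that operations execute within a single transaction, where changes are either fully committed or rolled back, maintaining data consistency. By default, Spring rolls back on unchecked exceptions (RuntimeException and Error) but not on checked exceptions; the rollbackFor attribute changes this.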

save() vs. saveAndFlush()

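- save(): Persists or merges the entity. The SQL is typically flushed when the transaction commits, or earlier if the persistence provider needs to flush.

- saveAndFlush(): Saves and immediately flushes the pending changes to the database, so the SQL runs right away. The changes are still not committed until the transaction commits, and they can still be rolled back.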

Pagination and Sorting

Pagination and sorting are handled with the Pageable interface (for example, PageRequest.of(page, size)) and the Sort class, which repository methods such as findAll(Pageable) accept.
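Under the hood, a page request boils down to offset arithmetic. This plain-Java sketch assumes zero-based page numbers, which is Spring Data's convention for PageRequest.of(page, size). It shows how one page and the total page count are derived. The PageSketch class is hypothetical; a real repository pushes the offset and limit into the SQL query instead of slicing a list in memory.

```java
import java.util.List;

class PageSketch {
    // Zero-based page index, the same convention as PageRequest.of(page, size).
    static <T> List<T> page(List<T> sortedItems, int pageNumber, int pageSize) {
        if (pageNumber < 0 || pageSize < 1) {
            throw new IllegalArgumentException("page must be >= 0 and size >= 1");
        }
        long offset = (long) pageNumber * pageSize; // the OFFSET a database query would use
        if (offset >= sortedItems.size()) {
            return List.of(); // past the last page
        }
        int from = (int) offset;
        int to = (int) Math.min((long) from + pageSize, sortedItems.size()); // LIMIT, clipped at the end
        return sortedItems.subList(from, to);
    }

    static int totalPages(int totalElements, int pageSize) {
        return (totalElements + pageSize - 1) / pageSize; // ceiling division
    }
}
```

For 10 sorted items and a page size of 3, page 1 holds the 4th to 6th items, and there are 4 pages in total.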

JPA Fetch Types

- FetchType.EAGER: Loads associated entities immediately with the main entity.

- FetchType.LAZY: Loads associated entities only when accessed.

Spring Security

Spring Security is a customizable framework for handling authentication, authorization, and integration with technologies like OAuth2, JWT, and LDAP.

Core Concepts

- Authentication: Verifies the identity of a user.

- Authorization: Determines whether a user has permission to perform certain actions.

- CSRF Protection: Protects against Cross-Site Request Forgery.

- UserDetailsService: Core interface used to load user-specific data.

- GrantedAuthority and Role: Represent user roles and privileges.

Handling Authentication in Spring Security

Authentication is managed by delegating the process to an AuthenticationManager, which works with AuthenticationProvider to retrieve user details from a UserDetailsService and verify credentials. Upon successful authentication, an Authentication object is created and stored in the SecurityContextHolder.

UserDetailsService in Spring Security

The UserDetailsService interface loads user-specific data during authentication. Its loadUserByUsername(String username) method returns a UserDetails object containing user credentials and authorities:

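```java
@Service
public class MyUserDetailsService implements UserDetailsService {

    @Override
    public UserDetails loadUserByUsername(String username) throws UsernameNotFoundException {
        // Hardcoded single user for illustration; a real service would query a user store.
        if (!"john".equals(username)) {
            throw new UsernameNotFoundException("User not found: " + username);
        }
        // "{noop}" marks a plain-text password (demo only; prefer BCrypt in production).
        return new User("john", "{noop}password",
                Collections.singletonList(new SimpleGrantedAuthority("ROLE_USER")));
    }
}
```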

GrantedAuthority vs. Role

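- GrantedAuthority: Represents a permission or privilege that the user has, such as "READ_PRIVILEGE" or "WRITE_PRIVILEGE".

- Role: Conceptually a group of authorities. In Spring Security, a role is represented as a GrantedAuthority whose name starts with the "ROLE_" prefix, like "ROLE_ADMIN" or "ROLE_USER".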

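The difference shows up when checking access. By default, hasRole("ADMIN") looks for the authority "ROLE_ADMIN", while hasAuthority matches the exact string. The plain-Java sketch below mimics that rule. It is a hypothetical helper, not Spring Security's implementation.

```java
import java.util.Set;

class AuthorityCheckSketch {
    private static final String ROLE_PREFIX = "ROLE_"; // Spring Security's default role prefix

    // Like hasAuthority("READ_PRIVILEGE"): exact match on the authority string.
    static boolean hasAuthority(Set<String> granted, String authority) {
        return granted.contains(authority);
    }

    // Like hasRole("ADMIN"): the prefix is added (if missing) before matching.
    static boolean hasRole(Set<String> granted, String role) {
        String authority = role.startsWith(ROLE_PREFIX) ? role : ROLE_PREFIX + role;
        return granted.contains(authority);
    }
}
```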
Managing Security Context Across Requests

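Spring Security uses SecurityContextHolder to store the security context, which contains the details of the authenticated user. By default, the holder keeps the context in a ThreadLocal bound to the current request thread. In traditional session-based applications, the context is persisted in the HTTP session between requests, while stateless APIs (for example, JWT-based ones) authenticate every request.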
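The default thread-local strategy can be pictured with a minimal sketch. SecurityContextSketch is hypothetical and stores only a username instead of a full SecurityContext. The key points are that each thread sees its own value, and that the value must be cleared when the request ends, because servlet containers reuse pooled threads.

```java
class SecurityContextSketch {
    // One slot per thread, similar in spirit to SecurityContextHolder's default ThreadLocal strategy.
    private static final ThreadLocal<String> CURRENT_USER = new ThreadLocal<>();

    static void setUser(String username) {
        CURRENT_USER.set(username);
    }

    static String currentUser() {
        return CURRENT_USER.get();
    }

    static void clear() {
        CURRENT_USER.remove(); // prevents the user from leaking into the next request on a pooled thread
    }

    // Simulates one request: restore the user (e.g. from the session), do the work, always clear.
    static String handleRequest(String sessionUser) {
        setUser(sessionUser);
        try {
            return "hello " + currentUser();
        } finally {
            clear();
        }
    }
}
```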

PasswordEncoder

The PasswordEncoder interface is used to hash and verify passwords. BCryptPasswordEncoder hashes passwords with a random salt before they are saved. NoOpPasswordEncoder stores passwords in plain text, is deprecated, and is only suitable for tests.
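BCryptPasswordEncoder is the usual choice in Spring Security, but BCrypt is not part of the JDK. This plain-Java sketch therefore swaps in PBKDF2 (PBKDF2WithHmacSHA256 ships with the JDK) to show the same ideas: a random per-password salt, a deliberately slow hash, and a constant-time comparison. Spring Security does ship a Pbkdf2PasswordEncoder, but the SaltedPasswordHasher class below is a hypothetical illustration, not that class. The "salt:hash" storage format and the iteration count are assumptions.

```java
import java.security.MessageDigest;
import java.security.NoSuchAlgorithmException;
import java.security.SecureRandom;
import java.security.spec.InvalidKeySpecException;
import java.util.Base64;
import javax.crypto.SecretKeyFactory;
import javax.crypto.spec.PBEKeySpec;

class SaltedPasswordHasher {
    private static final int ITERATIONS = 100_000; // illustrative; raise it per current guidance (e.g. OWASP)
    private static final int KEY_LENGTH_BITS = 256;
    private static final SecureRandom RANDOM = new SecureRandom();

    // Returns "salt:hash", both Base64-encoded, so the salt is stored next to the hash.
    static String encode(String rawPassword) {
        byte[] salt = new byte[16];
        RANDOM.nextBytes(salt);
        byte[] hash = derive(rawPassword.toCharArray(), salt);
        Base64.Encoder b64 = Base64.getEncoder();
        return b64.encodeToString(salt) + ":" + b64.encodeToString(hash);
    }

    static boolean matches(String rawPassword, String encoded) {
        String[] parts = encoded.split(":");
        Base64.Decoder b64 = Base64.getDecoder();
        byte[] salt = b64.decode(parts[0]);
        byte[] expected = b64.decode(parts[1]);
        byte[] actual = derive(rawPassword.toCharArray(), salt);
        return MessageDigest.isEqual(expected, actual); // constant-time comparison
    }

    private static byte[] derive(char[] password, byte[] salt) {
        try {
            PBEKeySpec spec = new PBEKeySpec(password, salt, ITERATIONS, KEY_LENGTH_BITS);
            return SecretKeyFactory.getInstance("PBKDF2WithHmacSHA256").generateSecret(spec).getEncoded();
        } catch (NoSuchAlgorithmException | InvalidKeySpecException e) {
            throw new IllegalStateException("PBKDF2 is not available", e);
        }
    }
}
```

Because the salt is random, encoding the same password twice yields different strings, yet both still verify.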

Spring Security Filters

Spring Security uses a chain of filters to process authentication and authorization logic, with each filter executed in a specific order.
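In Spring Security these are servlet filters invoked through a FilterChainProxy. The sketch below shows only the underlying chain-of-responsibility idea in plain Java, and all of its names are hypothetical. Each filter either does its work and passes the request on, or stops the chain. Here, an authentication filter rejects an anonymous call to a protected path with 401.

```java
import java.util.ArrayList;
import java.util.List;

class FilterChainSketch {
    interface Filter {
        String doFilter(Request request, Chain chain);
    }

    static final class Request {
        final String path;
        final String user; // null when the request carries no credentials

        Request(String path, String user) {
            this.path = path;
            this.user = user;
        }
    }

    // Invokes the filters one after another, in registration order.
    static final class Chain {
        private final List<Filter> filters;
        private int position = 0;
        final List<String> trace = new ArrayList<>();

        Chain(List<Filter> filters) {
            this.filters = filters;
        }

        String proceed(Request request) {
            if (position < filters.size()) {
                return filters.get(position++).doFilter(request, this);
            }
            trace.add("controller"); // every filter let the request through
            return "200 OK";
        }
    }

    static final Filter LOGGING = (request, chain) -> {
        chain.trace.add("logging " + request.path);
        return chain.proceed(request);
    };

    // Stops the chain early when a protected path is called without a user.
    static final Filter AUTHENTICATION = (request, chain) -> {
        chain.trace.add("authentication");
        if (request.user == null && !request.path.startsWith("/public/")) {
            return "401 Unauthorized";
        }
        return chain.proceed(request);
    };

    static Chain newChain() {
        return new Chain(List.of(LOGGING, AUTHENTICATION));
    }
}
```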

Securing REST APIs

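```java
// Spring Security 5.x style: WebSecurityConfigurerAdapter was deprecated in 5.7 and removed in 6.0.
@Configuration
public class SecurityConfig extends WebSecurityConfigurerAdapter {

    @Override
    protected void configure(HttpSecurity http) throws Exception {
        http.csrf().disable() // only appropriate for non-browser API clients
            .authorizeRequests()
                .antMatchers("/api/admin/**").hasRole("ADMIN")
                .antMatchers("/api/user/**").hasRole("USER")
                .and()
            .httpBasic();
    }
}
```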

Protection Against CSRF Attacks

Spring Security protects against CSRF by generating a unique token for each session, which must be submitted with each state-changing request (such as POST, PUT, or DELETE).
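The token mechanism can be sketched in plain Java. The CsrfTokenSketch class is hypothetical, and keeping tokens in an in-memory map keyed by a session id is an assumption made for illustration. As in Spring Security's default behavior, safe methods (GET, HEAD, OPTIONS, TRACE) are not checked. A state-changing request must echo the session's unpredictable token, and the comparison is constant-time.

```java
import java.nio.charset.StandardCharsets;
import java.security.MessageDigest;
import java.security.SecureRandom;
import java.util.Base64;
import java.util.HashMap;
import java.util.Map;
import java.util.Set;

class CsrfTokenSketch {
    private static final SecureRandom RANDOM = new SecureRandom();
    private static final Set<String> SAFE_METHODS = Set.of("GET", "HEAD", "OPTIONS", "TRACE");
    private final Map<String, String> tokensBySession = new HashMap<>(); // sessionId -> token

    // Issues (or reuses) an unpredictable token bound to the session.
    String tokenFor(String sessionId) {
        return tokensBySession.computeIfAbsent(sessionId, id -> {
            byte[] bytes = new byte[32];
            RANDOM.nextBytes(bytes);
            return Base64.getUrlEncoder().withoutPadding().encodeToString(bytes);
        });
    }

    // Safe methods pass; state-changing ones must submit the session's token.
    boolean isAllowed(String method, String sessionId, String submittedToken) {
        if (SAFE_METHODS.contains(method)) {
            return true;
        }
        String expected = tokensBySession.get(sessionId);
        if (expected == null || submittedToken == null) {
            return false;
        }
        return MessageDigest.isEqual(expected.getBytes(StandardCharsets.UTF_8),
                submittedToken.getBytes(StandardCharsets.UTF_8));
    }
}
```

A forged cross-site form can make the browser send the session cookie, but it cannot read the token. The POST therefore arrives without the right value and is rejected.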

Implementing Custom Authentication Logic

```java
@Component
public class CustomAuthenticationProvider implements AuthenticationProvider {

    @Override
    public Authentication authenticate(Authentication authentication) throws AuthenticationException {
        String username = authentication.getName();
        String password = authentication.getCredentials().toString();

        if (validCredentials(username, password)) {
            // Authenticated token with an empty authority list
            return new UsernamePasswordAuthenticationToken(username, password, new ArrayList<>());
        } else {
            throw new BadCredentialsException("Invalid Credentials");
        }
    }

    @Override
    public boolean supports(Class<?> authentication) {
        return UsernamePasswordAuthenticationToken.class.isAssignableFrom(authentication);
    }

    // Hypothetical placeholder: replace with a check against your own user store.
    private boolean validCredentials(String username, String password) {
        return false;
    }
}
```

Disabling Security for Specific Endpoints

```java
http.authorizeRequests()
    .antMatchers("/public/**").permitAll() // No authentication required on public endpoints
    .anyRequest().authenticated();
```

WebFlux Security Configuration

```java
@Configuration
@EnableWebFluxSecurity
public class SecurityConfig {

    @Bean
    public SecurityWebFilterChain securityWebFilterChain(ServerHttpSecurity http) {
        http
            .cors() // Enable CORS
            .and()
            .csrf()
                .csrfTokenRepository(CookieServerCsrfTokenRepository.withHttpOnlyFalse()) // CSRF token in a cookie readable by JavaScript
            .and()
            .authorizeExchange()
                .pathMatchers("/api/public/**").permitAll() // No authentication required (CORS and CSRF still apply)
                .pathMatchers("/api/test").permitAll()      // Allow public access to /api/test
                .anyExchange().authenticated();             // Require authentication for other requests
        return http.build();
    }

    @Bean
    public CorsWebFilter corsWebFilter() {
        CorsConfiguration configuration = new CorsConfiguration();
        configuration.setAllowedOrigins(Arrays.asList("http://localhost:3000")); // Front-end origin; better read from configuration/env vars
        configuration.setAllowedMethods(Arrays.asList("GET", "POST", "PUT", "DELETE"));
        // X-XSRF-TOKEN is the header name CookieServerCsrfTokenRepository expects by default
        configuration.setAllowedHeaders(Arrays.asList("Authorization", "Content-Type", "X-XSRF-TOKEN"));
        configuration.setAllowCredentials(true);
        configuration.setMaxAge(3600L); // Cache preflight response for an hour

        // Reactive variant: org.springframework.web.cors.reactive.UrlBasedCorsConfigurationSource
        UrlBasedCorsConfigurationSource source = new UrlBasedCorsConfigurationSource();
        source.registerCorsConfiguration("/**", configuration);
        return new CorsWebFilter(source);
    }
}
```

Example Security Configuration (Spring Boot) for JWT Without CSRF

```java
@Configuration
@EnableWebSecurity
public class SecurityConfig {

    @Bean
    public SecurityFilterChain securityFilterChain(HttpSecurity http) throws Exception {
        http
            .csrf(csrf -> csrf.disable()) // Disable CSRF because we're stateless (JWT sent in a header, not a cookie)
            .authorizeHttpRequests(auth -> auth
                .requestMatchers("/api/public/**").permitAll() // Public endpoints, no JWT needed
                .anyRequest().authenticated()                  // All other endpoints require authentication
            )
            // Use JWT for OAuth2 Resource Server (e.g. spring.security.oauth2.resourceserver.jwt.issuer-uri)
            .oauth2ResourceServer(oauth2 -> oauth2.jwt(Customizer.withDefaults()));
        return http.build();
    }
}
```

Apache Kafka

Apache Kafka is a distributed streaming platform used to publish, subscribe to, store, and process streams of records in real-time.

- Producers: Publish messages to Kafka topics.

- Topics: Categories or feeds to which messages are sent.

- Brokers: Kafka servers that store data and serve clients.

- Consumers: Read messages from Kafka topics.

- ZooKeeper: Manages and coordinates Kafka brokers.

Messages in Kafka are stored in topics and divided into partitions for scalability. Each partition is an ordered, immutable sequence of messages that is continually appended to. Kafka guarantees message durability and fault tolerance by replicating partitions across multiple brokers.
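How a keyed message lands in a partition can be sketched in a few lines. Kafka's default partitioner hashes the serialized key with murmur2. This sketch swaps in String.hashCode() for brevity, so its partition numbers will not match a real cluster; only the property matters here. The same key always maps to the same partition, which is what preserves per-key ordering. PartitionSketch is a hypothetical name.

```java
class PartitionSketch {
    // Same key, same partition. Kafka itself uses murmur2 on the key bytes; String.hashCode() is a stand-in.
    static int partitionFor(String key, int numPartitions) {
        return (key.hashCode() & 0x7fffffff) % numPartitions; // mask keeps the result non-negative
    }

    public static void main(String[] args) {
        for (String key : new String[] {"user-1", "user-2", "user-1"}) {
            System.out.println(key + " -> partition " + partitionFor(key, 3));
        }
    }
}
```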

Concept of Kafka’s "Offset"

An offset is a unique identifier for each message within a partition.

- Message Tracking: Consumers use offsets to track which messages they have processed. Offsets are committed per consumer group and partition, so each group keeps its own position in the topic.

- Consumer Groups: Kafka allows multiple consumers to form a group to share the load of processing messages from a topic.

- Offsets can be managed automatically or manually.
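The offset mechanics can be modeled with a small in-memory sketch of a single partition. PartitionLogSketch is hypothetical; in real Kafka, committed offsets are tracked per group and per partition. The log is append-only, with each message's index as its offset. Each consumer group keeps its own committed position, and polling without committing re-reads the same messages.

```java
import java.util.ArrayList;
import java.util.HashMap;
import java.util.List;
import java.util.Map;

class PartitionLogSketch {
    private final List<String> log = new ArrayList<>();          // append-only; index == offset
    private final Map<String, Long> committed = new HashMap<>(); // consumer group -> next offset to read

    long append(String message) {
        log.add(message);
        return log.size() - 1; // offset assigned to the new message
    }

    // Returns up to maxRecords messages starting at the group's committed offset.
    List<String> poll(String groupId, int maxRecords) {
        int start = Math.toIntExact(committed.getOrDefault(groupId, 0L));
        int end = Math.min(start + maxRecords, log.size());
        return new ArrayList<>(log.subList(start, end));
    }

    // Manual commit: everything before nextOffset has been processed by this group.
    void commit(String groupId, long nextOffset) {
        committed.put(groupId, nextOffset);
    }
}
```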

How Does Kafka Ensure Message Durability and Fault Tolerance?

- Replication: Each partition in Kafka is replicated across multiple brokers (replicas).

- Acknowledgements: Producers can configure the level of acknowledgment required from brokers before considering a message as successfully written.

- Durable Storage: Kafka stores messages on disk with a configurable retention period. Messages are not deleted until the retention period expires or until the log is compacted (in the case of compacted topics).

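ZooKeeper in Kafka

ZooKeeper is a distributed coordination service that Kafka has traditionally used to manage and coordinate brokers, topics, and partitions. Modern versions store consumer group offsets in Kafka's internal __consumer_offsets topic rather than in ZooKeeper. Recent Kafka releases replace ZooKeeper entirely with the built-in KRaft mode.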

- Leader Election: Electing leaders for partitions.

- Broker Metadata Management: Keeping track of broker information and configuration.

- Configuration Management: Storing and managing Kafka configuration and state.

Kafka Configuration

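```java
import java.util.HashMap;
import java.util.Map;

import org.apache.kafka.clients.consumer.ConsumerConfig;
import org.apache.kafka.clients.producer.ProducerConfig;
import org.apache.kafka.common.serialization.StringDeserializer;
import org.apache.kafka.common.serialization.StringSerializer;
import org.springframework.context.annotation.Bean;
import org.springframework.context.annotation.Configuration;
import org.springframework.kafka.annotation.EnableKafka;
import org.springframework.kafka.config.ConcurrentKafkaListenerContainerFactory;
import org.springframework.kafka.core.ConsumerFactory;
import org.springframework.kafka.core.DefaultKafkaConsumerFactory;
import org.springframework.kafka.core.DefaultKafkaProducerFactory;
import org.springframework.kafka.core.KafkaTemplate;
import org.springframework.kafka.core.ProducerFactory;
import org.springframework.kafka.support.serializer.JsonDeserializer;
import org.springframework.kafka.support.serializer.JsonSerializer;

@Configuration
@EnableKafka
public class KafkaConfig {

    // Producer configuration for writing POJOs (User class) as JSON
    @Bean
    public ProducerFactory<String, User> producerFactory() {
        Map<String, Object> config = new HashMap<>();
        config.put(ProducerConfig.BOOTSTRAP_SERVERS_CONFIG, "localhost:9092");
        config.put(ProducerConfig.KEY_SERIALIZER_CLASS_CONFIG, StringSerializer.class); // For Key
        config.put(ProducerConfig.VALUE_SERIALIZER_CLASS_CONFIG, JsonSerializer.class); // For Value (POJO)
        return new DefaultKafkaProducerFactory<>(config);
    }

    @Bean
    public KafkaTemplate<String, User> kafkaTemplate() {
        return new KafkaTemplate<>(producerFactory());
    }

    // Consumer configuration for reading POJOs (User class)
    @Bean
    public ConsumerFactory<String, User> consumerFactory() {
        Map<String, Object> config = new HashMap<>();
        config.put(ConsumerConfig.BOOTSTRAP_SERVERS_CONFIG, "localhost:9092");
        config.put(ConsumerConfig.GROUP_ID_CONFIG, "group_id");
        config.put(ConsumerConfig.KEY_DESERIALIZER_CLASS_CONFIG, StringDeserializer.class); // For Key
        config.put(ConsumerConfig.VALUE_DESERIALIZER_CLASS_CONFIG, JsonDeserializer.class); // For Value (POJO)
        config.put(JsonDeserializer.TRUSTED_PACKAGES, "com.package"); // Placeholder: the package containing your POJO
        return new DefaultKafkaConsumerFactory<>(config, new StringDeserializer(), new JsonDeserializer<>(User.class));
    }

    @Bean
    public ConcurrentKafkaListenerContainerFactory<String, User> kafkaListenerContainerFactory() {
        ConcurrentKafkaListenerContainerFactory<String, User> factory = new ConcurrentKafkaListenerContainerFactory<>();
        factory.setConsumerFactory(consumerFactory());
        return factory;
    }
}
```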

KafkaProducer vs KafkaConsumer

The KafkaProducer is responsible for sending messages to a Kafka topic, while the KafkaConsumer is responsible for reading those messages.

```java
// KafkaProducer example (e.g. inside a @Service)
private static final String TOPIC = "user_topic"; // must match the topic the listener subscribes to

@Autowired
private KafkaTemplate<String, User> kafkaTemplate;

public void sendUser(User user) {
    kafkaTemplate.send(TOPIC, user);
}

// KafkaConsumer example
@KafkaListener(topics = "user_topic", groupId = "group_id", containerFactory = "kafkaListenerContainerFactory")
public void consumeUser(User user) {
    // Handle the deserialized User here
}
```

User Class Definition:

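```java
public class User implements Serializable {
    private String id;
    private String name;
    private String email;
    private Address address; // Address is another POJO (not shown)

    // Getters and setters (not shown) let the JSON (Jackson) serializer read and write these fields
}
```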

Spring Batch

Spring Batch is a lightweight, comprehensive framework designed for building robust, large-scale batch processing applications.

- Job: A batch job composed of one or more steps.

- Step: A phase within a job.

- ItemReader: Reads input data, such as from a database, file, or message queue.

- ItemProcessor: Processes each item read by the ItemReader.

- ItemWriter: Writes the processed data to the output, like a file or database.

- JobRepository: Stores metadata about jobs, including job executions, steps, and statuses.

- JobLauncher: Starts jobs and manages job executions.

Chunk-Oriented Step vs Tasklet in Spring Batch

- Chunk-Oriented Step: The data is read, processed, and written in chunks. An ItemReader reads items, an ItemProcessor processes them, and an ItemWriter writes them in bulk.

Example: Read 1000 records from a file, process them, and write them to a database in chunks of 10.

- Tasklet: Performs a single, specific task, such as executing a script or deleting files. A tasklet doesn’t involve chunking and is used for one-time or customizable operations.
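The chunk-oriented loop can be sketched in plain Java, using an Iterator, a Function and a Consumer as stand-ins for ItemReader, ItemProcessor and ItemWriter. This is a hypothetical simplification, not Spring Batch code. It follows the same read-process-write rhythm: read up to chunkSize items, process each one, then hand the whole chunk to the writer in one call. In Spring Batch, each such chunk is committed in its own transaction.

```java
import java.util.ArrayList;
import java.util.Iterator;
import java.util.List;
import java.util.function.Consumer;
import java.util.function.Function;

class ChunkStepSketch {
    // Returns the number of chunks written (one commit per chunk in a real step).
    static <I, O> int runChunkStep(Iterator<I> reader, Function<I, O> processor,
                                   Consumer<List<O>> writer, int chunkSize) {
        int chunks = 0;
        while (reader.hasNext()) {
            // 1. Read up to chunkSize items.
            List<I> items = new ArrayList<>(chunkSize);
            while (items.size() < chunkSize && reader.hasNext()) {
                items.add(reader.next());
            }
            // 2. Process each item of the chunk.
            List<O> outputs = new ArrayList<>(items.size());
            for (I item : items) {
                outputs.add(processor.apply(item));
            }
            // 3. Write the whole chunk at once.
            writer.accept(outputs);
            chunks++;
        }
        return chunks;
    }
}
```

With 25 input records and a chunk size of 10, the writer is called three times, with 10, 10 and 5 items.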

Fault-Tolerance and Retry in Spring Batch

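Spring Batch offers several mechanisms for fault tolerance during job execution. A fault-tolerant step can skip items that throw specified exceptions (up to a skip limit) and retry operations that fail with transient exceptions (up to a retry limit). Failed jobs can also be restarted.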

Managing and Restarting Failed Jobs in Spring Batch

Spring Batch has built-in support for restarting failed jobs. When a job fails, its execution status is stored in the JobRepository. This allows the job to restart from the failure point without reprocessing the entire dataset.

Job Instance and Job Execution in Spring Batch

Job execution in Spring Batch is associated with a Job Instance. If a job fails, it can be restarted with the same job parameters, creating a new Job Execution for the instance.

- Step Execution Context: Saves the state of each step in the ExecutionContext, including the last processed item. Upon job restart, this context allows resuming from the failure point.

Java Streams API

The Java Streams API provides a powerful tool for processing collections of data using functional programming principles. It allows operations like filtering, mapping, and reducing data without the need for manual loops or iteration.

```java
import java.util.Arrays;
import java.util.Comparator;
import java.util.List;
import java.util.Optional;
import java.util.stream.Collectors;

// Filtering even numbers
List<Integer> numbers = Arrays.asList(1, 2, 3, 4, 5, 6, 7, 8, 9, 10);
List<Integer> evenNumbers = numbers.stream().filter(n -> n % 2 == 0).collect(Collectors.toList());

// Reducing to a single value (sum)
List<Integer> smallNumbers = Arrays.asList(1, 2, 3, 4, 5);
int sum = smallNumbers.stream().reduce(0, (a, b) -> a + b); // 15

// Finding the first element that starts with "b"
List<String> words = Arrays.asList("apple", "banana", "cherry", "date");
Optional<String> result = words.stream().filter(word -> word.startsWith("b")).findFirst(); // Optional[banana]

// Processing elements in parallel (reuses 'numbers'; the print order is not guaranteed)
numbers.parallelStream().forEach(System.out::println);

// Sorting in reverse order
List<String> names = Arrays.asList("John", "Alice", "Bob", "Charlie");
List<String> sortedNames = names.stream().sorted(Comparator.reverseOrder()).collect(Collectors.toList()); // [John, Charlie, Bob, Alice]
```

Throwable, Error, and Exception in Java

Throwable is the superclass of all errors and exceptions in Java.

- Error: Represents severe issues that applications should not attempt to handle (e.g., OutOfMemoryError, StackOverflowError).

- Exception: Represents recoverable issues an application might want to handle (e.g., IOException, NullPointerException).

Checked Exceptions: Must be caught or declared; they represent predictable problems like IOException, SQLException.

Unchecked Exceptions: Do not need to be caught or declared; they indicate runtime issues like NullPointerException, ArrayIndexOutOfBoundsException.

```text
Object
└── Throwable
    ├── Error
    │   └── OutOfMemoryError, StackOverflowError, etc.
    └── Exception
        ├── IOException (Checked Exception)
        └── RuntimeException (Unchecked Exception)
            ├── NullPointerException
            └── ArrayIndexOutOfBoundsException
```
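The checked/unchecked distinction is easy to see in code. In this sketch (class and method names are hypothetical), the compiler forces readFirstChar to declare IOException, while lengthOf can throw a NullPointerException without any declaration. The catch order also matters: FileNotFoundException is a subclass of IOException, so it must be caught first.

```java
import java.io.FileNotFoundException;
import java.io.FileReader;
import java.io.IOException;

class ExceptionKindsDemo {
    // Checked: callers must catch IOException or declare it with "throws".
    static String readFirstChar(String path) throws IOException {
        try (FileReader reader = new FileReader(path)) {
            int c = reader.read();
            return c == -1 ? "" : String.valueOf((char) c);
        }
    }

    // Unchecked: nothing to declare; a null argument fails at runtime.
    static int lengthOf(String text) {
        return text.length(); // throws NullPointerException when text is null
    }

    static String describe(String path, String text) {
        try {
            return readFirstChar(path) + lengthOf(text);
        } catch (FileNotFoundException e) { // more specific checked exception first
            return "checked: file not found";
        } catch (IOException e) {
            return "checked: I/O error";
        } catch (RuntimeException e) {
            return "unchecked: " + e.getClass().getSimpleName();
        }
    }
}
```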

Abstract Class vs Interface

Abstract classes and interfaces are used to define templates for classes in Java, but they differ in purpose and implementation.

Abstract Class: Can include method implementations. Subclasses inherit both abstract and concrete methods.

```java
abstract class Animal {
    void eat() {
        System.out.println("Eating...");
    }

    abstract void makeSound();
}

class Dog extends Animal {
    @Override
    void makeSound() {
        System.out.println("Woof!");
    }
}
```

Interface: Defines a contract that implementing classes must fulfill. Methods declared without a body are implicitly public and abstract; since Java 8, interfaces can also contain default and static methods.

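```java
// Sender.java
import java.io.File;

public interface Sender {
    void send(File fileToBeSent);
}

// ImageSender.java
import java.io.File;

public class ImageSender implements Sender {
    @Override
    public void send(File fileToBeSent) {
        // Image-specific sending logic goes here
    }
}
```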

Try-with-Resources

The try-with-resources statement, introduced in Java 7, simplifies resource management by automatically closing resources like files, database connections, and sockets when they are no longer needed. It works with resources that implement the AutoCloseable interface.

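```java
try (ResourceType resource = new ResourceType()) { // ResourceType must implement AutoCloseable
    // Use resource
} catch (ExceptionType e) {
    // Handle exception; the resource has already been closed at this point
}
```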
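A short sketch with a hypothetical TrackedResource class shows what the statement does for you. Resources declared in the header are closed automatically, in reverse order of declaration, and this happens before any catch block runs.

```java
import java.util.ArrayList;
import java.util.List;

class TryWithResourcesDemo {
    static final List<String> events = new ArrayList<>();

    // Any AutoCloseable can be declared in a try-with-resources header.
    static final class TrackedResource implements AutoCloseable {
        private final String name;

        TrackedResource(String name) {
            this.name = name;
            events.add("open " + name);
        }

        @Override
        public void close() {
            events.add("close " + name);
        }
    }

    static void run(boolean fail) {
        try (TrackedResource first = new TrackedResource("first");
             TrackedResource second = new TrackedResource("second")) {
            events.add("use");
            if (fail) {
                throw new IllegalStateException("boom");
            }
        } catch (IllegalStateException e) {
            events.add("caught " + e.getMessage()); // both resources are already closed here
        }
    }
}
```

After run(true), the recorded events are: open first, open second, use, close second, close first, caught boom.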

Java Collections Overview

Java Collections Framework provides a set of classes and interfaces for managing and organizing groups of objects in various structures like Map and Set.

Map Implementations

- HashMap: Does not guarantee any specific order of elements. Typically offers better performance than LinkedHashMap since it doesn’t maintain element order.

- LinkedHashMap: Maintains insertion order, so elements are iterated in the order they were added.

Set Implementations

- HashSet: Does not guarantee order of elements. Allows null values.

- TreeSet: Orders elements in natural order (e.g., ascending for numbers, alphabetical for strings). Does not allow null elements.
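A quick way to see these ordering rules is to print the same elements from each structure. This sketch assumes Java 10 or later (for `List.copyOf`), and the keys are arbitrary. `HashMap` is deliberately left out of the printed checks because its iteration order is unspecified:

```java
import java.util.HashSet;
import java.util.LinkedHashMap;
import java.util.List;
import java.util.Map;
import java.util.Set;
import java.util.TreeSet;

public class OrderingDemo {
    // LinkedHashMap: iteration follows insertion order.
    static List<String> linkedHashMapKeys() {
        Map<String, Integer> map = new LinkedHashMap<>();
        map.put("banana", 2);
        map.put("apple", 1);
        map.put("cherry", 3);
        return List.copyOf(map.keySet());
    }

    // TreeSet: iteration follows natural ordering (alphabetical for strings).
    static List<String> treeSetElements() {
        Set<String> set = new TreeSet<>(List.of("banana", "apple", "cherry"));
        return List.copyOf(set);
    }

    // HashSet accepts a single null element.
    static boolean hashSetAcceptsNull() {
        Set<String> set = new HashSet<>();
        return set.add(null) && set.contains(null);
    }

    // TreeSet with natural ordering cannot compare null, so add(null) throws.
    static boolean treeSetRejectsNull() {
        try {
            new TreeSet<String>().add(null);
            return false;
        } catch (NullPointerException e) {
            return true;
        }
    }

    public static void main(String[] args) {
        System.out.println(linkedHashMapKeys());  // [banana, apple, cherry]
        System.out.println(treeSetElements());    // [apple, banana, cherry]
        System.out.println(hashSetAcceptsNull()); // true
        System.out.println(treeSetRejectsNull()); // true
    }
}
```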

Thread-Safe Collections

- ConcurrentHashMap

- Synchronized HashMap (created with Collections.synchronizedMap(new HashMap<>()))

- Vector

- Hashtable

- CopyOnWriteArrayList and CopyOnWriteArraySet

- Stack
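Thread safety matters most for compound updates such as incrementing a counter. This sketch (thread and iteration counts are arbitrary) has several threads update one key of a `ConcurrentHashMap`; `merge` is performed atomically, so no increments are lost:

```java
import java.util.Map;
import java.util.concurrent.ConcurrentHashMap;
import java.util.concurrent.ExecutorService;
import java.util.concurrent.Executors;
import java.util.concurrent.TimeUnit;

public class ConcurrentCounterDemo {
    // Several threads increment the same key; merge() runs atomically on ConcurrentHashMap.
    static int countConcurrently(int threads, int incrementsPerThread) {
        Map<String, Integer> counts = new ConcurrentHashMap<>();
        ExecutorService pool = Executors.newFixedThreadPool(threads);
        for (int t = 0; t < threads; t++) {
            pool.submit(() -> {
                for (int i = 0; i < incrementsPerThread; i++) {
                    counts.merge("hits", 1, Integer::sum);
                }
            });
        }
        pool.shutdown();
        try {
            pool.awaitTermination(30, TimeUnit.SECONDS);
        } catch (InterruptedException e) {
            Thread.currentThread().interrupt(); // preserve the interrupt flag
        }
        return counts.getOrDefault("hits", 0);
    }

    public static void main(String[] args) {
        System.out.println(countConcurrently(4, 10_000)); // 40000
    }
}
```

With a plain `HashMap` and `map.put(key, map.get(key) + 1)`, the same program would typically report fewer than 40000 hits, because concurrent read-modify-write updates overwrite each other.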

HashMap, HashSet, and ArrayList

hashCode() and equals() methods are crucial for object comparison and storage in hash-based collections.

HashMap

When adding a key-value pair, hashCode() finds the bucket, and equals() checks for an existing equal key within that bucket. If one is found, its value is replaced.

HashSet

When adding an element, hashCode() finds the bucket. If the bucket contains elements, equals() checks for duplicates. If a duplicate is found, it won’t be added.

Best Practices

- Consistency: If two objects are equal according to equals(), they must return the same hashCode() value.

- Immutability: Fields used in equals() and hashCode() should be immutable to maintain consistency.

- Avoid Randomness: hashCode() should consistently return the same value for the same object state.
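The sketch below applies these practices to a hypothetical `Point` class: its fields are final, and `equals()` and `hashCode()` use the same fields, so a `HashSet` treats two equal points as one element. The equally hypothetical `BrokenPoint` overrides only `equals()`, which breaks the contract:

```java
import java.util.HashSet;
import java.util.Objects;
import java.util.Set;

public class HashContractDemo {
    // Immutable key: the same final fields drive both equals() and hashCode().
    static final class Point {
        private final int x;
        private final int y;

        Point(int x, int y) {
            this.x = x;
            this.y = y;
        }

        @Override
        public boolean equals(Object o) {
            if (this == o) return true;
            if (!(o instanceof Point)) return false;
            Point other = (Point) o;
            return x == other.x && y == other.y;
        }

        @Override
        public int hashCode() {
            return Objects.hash(x, y); // equal points always produce equal hash codes
        }
    }

    // Breaks the contract: equals() is overridden but hashCode() is not.
    static final class BrokenPoint {
        private final int x;

        BrokenPoint(int x) {
            this.x = x;
        }

        @Override
        public boolean equals(Object o) {
            return o instanceof BrokenPoint && ((BrokenPoint) o).x == x;
        }
    }

    public static void main(String[] args) {
        Set<Point> points = new HashSet<>();
        points.add(new Point(1, 2));
        points.add(new Point(1, 2));       // equal to an existing element: rejected
        System.out.println(points.size()); // 1

        Set<BrokenPoint> broken = new HashSet<>();
        broken.add(new BrokenPoint(1));
        broken.add(new BrokenPoint(1));    // identity hash codes usually differ
        System.out.println(broken.size()); // usually 2, although equals() says they match
    }
}
```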

ArrayList vs LinkedList

ArrayList

Backed by: A dynamic array.

Access time (get/set): Fast (O(1) for get(index)).

Insert/remove (middle): Slow (O(n)) — requires shifting elements.

Insert/remove (end): Fast (O(1) amortized for add at end).

Memory: Uses less memory than LinkedList.

✅ Best for:

Random access.

Frequent reading.

Appending items.

LinkedList

Backed by: A doubly-linked list.

Access time (get/set): Slow (O(n)) — must traverse nodes.

Insert/remove (middle or ends): Fast (O(1) if node is known, otherwise O(n)).

Memory: Uses more memory (because of node pointers).

✅ Best for:

Frequent insertion/removal, especially at the beginning or middle.

Queue or deque (double-ended queue) operations.
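The sketch below shows where `LinkedList` fits: as a `Deque` with cheap operations at both ends, and for insertions through a `ListIterator`, where each insertion is O(1) once the iterator is positioned. Values and method names are illustrative:

```java
import java.util.ArrayList;
import java.util.Deque;
import java.util.LinkedList;
import java.util.List;
import java.util.ListIterator;

public class ListChoiceDemo {
    // LinkedList as a deque: adding or removing at either end needs no shifting.
    static List<Integer> dequeOrder() {
        Deque<Integer> deque = new LinkedList<>();
        deque.addLast(2);
        deque.addLast(3);
        deque.addFirst(1);
        List<Integer> out = new ArrayList<>();
        while (!deque.isEmpty()) {
            out.add(deque.pollFirst());
        }
        return out;
    }

    // Inserting through a ListIterator relinks nodes instead of shifting elements.
    static List<String> insertAfterEach(List<String> source, String marker) {
        LinkedList<String> list = new LinkedList<>(source);
        ListIterator<String> it = list.listIterator();
        while (it.hasNext()) {
            it.next();
            it.add(marker); // goes right after the element just returned
        }
        return list;
    }

    public static void main(String[] args) {
        System.out.println(dequeOrder());                            // [1, 2, 3]
        System.out.println(insertAfterEach(List.of("a", "b"), "|")); // [a, |, b, |]
        List<Integer> array = new ArrayList<>(List.of(10, 20, 30));
        System.out.println(array.get(1)); // 20, constant-time random access
    }
}
```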

REST vs SOAP

REST

REST is an architectural style that uses standard HTTP methods (GET, POST, PUT, DELETE) for CRUD operations and commonly exchanges data in JSON format.

Security: Typically relies on HTTPS, OAuth, and JWT.

SOAP

SOAP is a protocol with a strict XML structure, often used for enterprise applications, and can use various transport protocols like HTTP, HTTPS, SMTP, or JMS.

Security: Uses WS-Security, supporting message-level security with encryption and authentication.

1. Protocol/Standard

REST:

Not a protocol, but an architectural style.

Relies on standard HTTP methods (GET, POST, PUT, DELETE, etc.).

Data formats: JSON (commonly), XML, HTML, plain text, etc.

SOAP:

A protocol with strict standards defined by W3C.

Operates over protocols like HTTP, SMTP, or others.

Data format: Exclusively XML.

2. Simplicity

REST:

Lightweight and simpler to implement.

Easy to consume due to its reliance on URLs and standard HTTP.

SOAP:

More complex due to strict standards.

Requires a SOAP envelope and compliance with its extensive XML schema.
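To make the difference concrete, this sketch builds (but does not send) the same "get user 42" call both ways with the JDK's `java.net.http` API (Java 11+). The endpoints, the `GetUser` operation, the `urn:example:users` namespace, and the `SOAPAction` value are hypothetical; the envelope uses the SOAP 1.1 namespace:

```java
import java.net.URI;
import java.net.http.HttpRequest;

public class RestVsSoapRequests {
    // REST: the URL names the resource and the HTTP method names the action.
    static HttpRequest restGetUser(int id) {
        return HttpRequest.newBuilder(URI.create("https://api.example.com/users/" + id))
                .header("Accept", "application/json")
                .GET()
                .build();
    }

    // SOAP 1.1: the operation and its arguments travel inside an XML envelope.
    static String envelope(int id) {
        return "<soap:Envelope xmlns:soap=\"http://schemas.xmlsoap.org/soap/envelope/\">"
                + "<soap:Body><GetUser xmlns=\"urn:example:users\"><id>" + id + "</id></GetUser>"
                + "</soap:Body></soap:Envelope>";
    }

    // SOAP calls are typically POSTs to a single endpoint, whatever the operation.
    static HttpRequest soapGetUser(int id) {
        return HttpRequest.newBuilder(URI.create("https://ws.example.com/UserService"))
                .header("Content-Type", "text/xml; charset=utf-8")
                .header("SOAPAction", "\"urn:example:users/GetUser\"")
                .POST(HttpRequest.BodyPublishers.ofString(envelope(id)))
                .build();
    }

    public static void main(String[] args) {
        HttpRequest rest = restGetUser(42);
        HttpRequest soap = soapGetUser(42);
        System.out.println(rest.method() + " " + rest.uri()); // GET https://api.example.com/users/42
        System.out.println(soap.method() + " " + soap.uri()); // POST https://ws.example.com/UserService
    }
}
```

Sending either request would go through `HttpClient.newHttpClient().send(request, HttpResponse.BodyHandlers.ofString())`.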

3. Performance

REST:

Generally faster, since it typically uses lightweight formats (e.g., JSON).

Less bandwidth-intensive.

SOAP:

Slower due to verbose XML messages and strict compliance.

More bandwidth required.

4. Flexibility

REST:

More flexible and can work with multiple formats like JSON, XML, etc.

Supports multiple data types and communication styles.

SOAP:

Limited to XML format for message exchange.

Offers less flexibility in data format, although SOAP messages can travel over several transports (e.g., HTTP, SMTP, JMS).

5. Use Cases

REST:

Preferred for public APIs, mobile applications, microservices, and cloud-based services.

Suitable for CRUD (Create, Read, Update, Delete) operations.

SOAP:

Best suited for enterprise-level applications needing high security and transactional reliability.

Common in banking, telecommunication, and financial services where ACID (Atomicity, Consistency, Isolation, Durability) properties are essential.

6. Security

REST:

Relies on HTTPS for basic security.

Additional layers like OAuth are required for enhanced security.

SOAP:

Built-in security features (WS-Security) for encryption, authentication, and secure transactions.

Better suited for applications needing rigorous security.

7. State Management

REST:

Stateless by design.

Each request is independent, with no session storage on the server.

SOAP:

Supports both stateful and stateless operations.

8. Error Handling

REST:

Uses HTTP status codes (e.g., 404 Not Found, 500 Internal Server Error) for error handling.

SOAP:

Provides detailed error reporting via its fault element in the XML response.
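The sketch below contrasts the two error styles. For REST, the status code class is enough to classify the outcome; for SOAP, the client has to parse the envelope and look for a `Fault` element. The fault message is hypothetical, and the parser assumes SOAP 1.1, where `faultstring` is an unqualified child of `Fault`:

```java
import java.io.ByteArrayInputStream;
import java.nio.charset.StandardCharsets;
import javax.xml.parsers.DocumentBuilderFactory;
import org.w3c.dom.Document;
import org.w3c.dom.NodeList;

public class ErrorHandlingDemo {
    static final String SOAP_11_NS = "http://schemas.xmlsoap.org/soap/envelope/";

    // Hypothetical fault response in SOAP 1.1 shape.
    static final String SAMPLE_FAULT =
            "<soap:Envelope xmlns:soap=\"" + SOAP_11_NS + "\"><soap:Body><soap:Fault>"
            + "<faultcode>soap:Client</faultcode><faultstring>Invalid user id</faultstring>"
            + "</soap:Fault></soap:Body></soap:Envelope>";

    // REST: the status code class tells the client what kind of error occurred.
    static String restOutcome(int status) {
        if (status >= 200 && status < 300) return "success";
        if (status >= 400 && status < 500) return "client error";
        if (status >= 500 && status < 600) return "server error";
        return "other";
    }

    // SOAP: returns the faultstring, or null when the envelope carries no Fault.
    static String soapFaultString(String envelope) {
        try {
            DocumentBuilderFactory factory = DocumentBuilderFactory.newInstance();
            factory.setNamespaceAware(true);
            // Reject DTDs to guard against XXE when parsing untrusted responses.
            factory.setFeature("http://apache.org/xml/features/disallow-doctype-decl", true);
            Document doc = factory.newDocumentBuilder()
                    .parse(new ByteArrayInputStream(envelope.getBytes(StandardCharsets.UTF_8)));
            if (doc.getElementsByTagNameNS(SOAP_11_NS, "Fault").getLength() == 0) {
                return null;
            }
            NodeList text = doc.getElementsByTagName("faultstring");
            return text.getLength() > 0 ? text.item(0).getTextContent() : "";
        } catch (Exception e) {
            throw new IllegalArgumentException("response is not well-formed XML", e);
        }
    }

    public static void main(String[] args) {
        System.out.println(restOutcome(404));              // client error
        System.out.println(soapFaultString(SAMPLE_FAULT)); // Invalid user id
    }
}
```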

Java Version Features

- Java 8: Introduced lambdas and the Streams API.

- Java 10: Added local variable type inference with var for more concise code.

- Java 11: Added new String methods such as isBlank(), lines(), and strip().

- Java 13: Introduced text blocks for multi-line strings as a preview feature (standard since Java 15).

- Java 14: Added pattern matching for instanceof as a preview feature (standard since Java 16).

- Java 15: Introduced sealed classes as a preview feature for more control over inheritance (standard since Java 17).

- Java 16: Added support for UNIX domain sockets.

- Java 17: New long-term support (LTS) version with enhanced random number generation via the RandomGenerator interface.
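Several of these features fit into one small program. This sketch needs Java 17 or later; the `Shape` hierarchy and sample strings are illustrative:

```java
import java.util.List;
import java.util.random.RandomGenerator;
import java.util.stream.Collectors;

public class JavaFeaturesDemo {
    // Sealed hierarchy (standard in Java 17): only Circle and Square may implement Shape.
    sealed interface Shape permits Circle, Square {}

    static final class Circle implements Shape {
        final double radius;
        Circle(double radius) { this.radius = radius; }
    }

    static final class Square implements Shape {
        final double side;
        Square(double side) { this.side = side; }
    }

    // Pattern matching for instanceof (standard in Java 16) binds c / s without a cast.
    static double area(Shape shape) {
        if (shape instanceof Circle c) return Math.PI * c.radius * c.radius;
        if (shape instanceof Square s) return s.side * s.side;
        throw new IllegalArgumentException("unknown shape");
    }

    // Text block (Java 15), var (Java 10), lines/isBlank/strip (Java 11),
    // and a lambda-based stream pipeline (Java 8).
    static List<String> nonBlankLines() {
        var text = """
                alpha

                  beta
                """;
        return text.lines()
                .filter(line -> !line.isBlank())
                .map(String::strip)
                .collect(Collectors.toList());
    }

    // RandomGenerator (Java 17): a common interface over the JDK's generators.
    static int rollDie() {
        return RandomGenerator.getDefault().nextInt(1, 7); // origin inclusive, bound exclusive
    }

    public static void main(String[] args) {
        System.out.println(area(new Square(2))); // 4.0
        System.out.println(nonBlankLines());     // [alpha, beta]
        System.out.println(rollDie());           // some value from 1 to 6
    }
}
```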

Docker Overview

Docker is an open-source platform that automates application deployment inside lightweight, portable containers, ensuring consistency across environments. Containers isolate applications and their dependencies, providing a self-contained runtime environment.

Core Docker Components

- Docker Engine: Core part of Docker responsible for building and running containers.

- Docker Daemon: A background service that manages Docker containers.

- Docker CLI: Command-line interface for interacting with Docker.

- Docker Images: Immutable snapshots of an application and its dependencies, used to create containers.

- Docker Containers: Running instances of Docker images, providing isolated environments for applications.

- Dockerfile: A text file with instructions for building a Docker image.

- Docker Hub: A cloud-based registry where Docker images are stored and shared.

Docker vs Virtual Machines (VMs)

- Docker Containers: Containers share the host OS kernel and are more lightweight as they only include the application and its dependencies. They offer faster startup times and lower resource consumption, with process-level isolation.

- Virtual Machines (VMs): VMs run their own guest OS on top of the host OS, providing stronger isolation but consuming more resources.

Data Persistence in Docker

Containers are ephemeral, meaning data is lost when a container is removed. To persist data, Docker offers two main options:

- Docker Volumes: Docker-managed storage locations that persist data beyond a container’s lifecycle.

- Bind Mounts: Links a directory on the host to a directory inside the container, allowing persistent storage of container data.

HTTP Methods Overview

- GET: Retrieves data from the server. Idempotent: Yes; parameters are sent in the URL query string.

- POST: Submits data to the server, causing a state change or side effect. Idempotent: No; data is sent in the request body.

- PATCH: Partially modifies a resource. Idempotent: Not guaranteed; it depends on how the patch is defined.

- PUT: Creates or replaces the resource at the target URL. Idempotent: Yes; data is sent in the request body.

- DELETE: Removes a resource from the server. Idempotent: Yes; the resource is identified by the URL.

- HEAD: Retrieves the headers for a resource, without the body. Idempotent: Yes; it behaves like GET but returns only headers.
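Idempotency is about the server state after repeated identical requests, not about the response. This sketch models a hypothetical in-memory user store (no real HTTP is involved) to show why repeating POST creates duplicates while repeating PUT or DELETE leaves the state unchanged:

```java
import java.util.LinkedHashMap;
import java.util.Map;

public class IdempotencyDemo {
    // Hypothetical in-memory "server" used only to illustrate method semantics.
    static final class UserStore {
        private final Map<Integer, String> users = new LinkedHashMap<>();
        private int nextId = 1;

        // POST: each call creates a new resource, so repeating it changes state again.
        int post(String name) {
            int id = nextId++;
            users.put(id, name);
            return id;
        }

        // PUT: sets the resource at a known id; repeating it leaves the same state.
        void put(int id, String name) {
            users.put(id, name);
        }

        // DELETE: removing an already-removed resource changes nothing further.
        void delete(int id) {
            users.remove(id);
        }

        Map<Integer, String> snapshot() {
            return new LinkedHashMap<>(users);
        }
    }

    public static void main(String[] args) {
        UserStore store = new UserStore();
        store.post("ana");
        store.post("ana");        // a second, separate resource
        store.put(10, "bruno");
        store.put(10, "bruno");   // still exactly one resource with id 10
        store.delete(1);
        store.delete(1);          // no further effect
        System.out.println(store.snapshot()); // {2=ana, 10=bruno}
    }
}
```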

Apache Airflow

Apache Airflow is an open-source platform primarily used for workflow orchestration, particularly popular in data engineering and data science to manage data pipelines and automate tasks.

Key Concepts

- Directed Acyclic Graphs (DAGs): A DAG represents a set of tasks with defined execution order and dependencies. Each task in a DAG is a node, and edges define the task sequence.

- Workflow Definition: Workflows are defined as Python code, allowing flexible programming constructs like loops and conditionals.

- Scheduler: Airflow's built-in scheduler triggers workflows based on time or event-based conditions.

- Parallel Task Execution: Tasks without dependencies can be executed in parallel, optimizing workflow execution.
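Airflow DAGs are written in Python, so the following is not Airflow code. It is a Java sketch, assuming a simple dependency map with hypothetical task names, of how a scheduler can derive both execution order and parallelism from a DAG: Kahn's topological sort groups tasks into waves, and tasks in the same wave have no dependencies on each other:

```java
import java.util.ArrayList;
import java.util.Collections;
import java.util.HashMap;
import java.util.List;
import java.util.Map;

public class DagWavesDemo {
    // upstream maps every task to the tasks it depends on (every task must be a key).
    // Tasks in the same returned wave could run in parallel.
    static List<List<String>> waves(Map<String, List<String>> upstream) {
        Map<String, Integer> pending = new HashMap<>();         // unmet dependency count
        Map<String, List<String>> downstream = new HashMap<>(); // reverse edges
        for (Map.Entry<String, List<String>> e : upstream.entrySet()) {
            pending.put(e.getKey(), e.getValue().size());
            for (String dep : e.getValue()) {
                downstream.computeIfAbsent(dep, k -> new ArrayList<>()).add(e.getKey());
            }
        }
        List<String> ready = new ArrayList<>();
        pending.forEach((task, count) -> {
            if (count == 0) ready.add(task);
        });
        List<List<String>> result = new ArrayList<>();
        int scheduled = 0;
        while (!ready.isEmpty()) {
            Collections.sort(ready); // deterministic order within a wave
            result.add(List.copyOf(ready));
            scheduled += ready.size();
            List<String> next = new ArrayList<>();
            for (String done : ready) {
                for (String child : downstream.getOrDefault(done, List.of())) {
                    // A task becomes ready once its last upstream dependency has finished.
                    if (pending.merge(child, -1, Integer::sum) == 0) next.add(child);
                }
            }
            ready.clear();
            ready.addAll(next);
        }
        if (scheduled != upstream.size()) {
            throw new IllegalArgumentException("cycle detected: the graph is not a DAG");
        }
        return result;
    }

    public static void main(String[] args) {
        Map<String, List<String>> etl = Map.of(
                "extract", List.of(),
                "transform_orders", List.of("extract"),
                "transform_users", List.of("extract"),
                "load", List.of("transform_orders", "transform_users"));
        System.out.println(waves(etl));
        // [[extract], [transform_orders, transform_users], [load]]
    }
}
```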

Executors in Airflow

Airflow supports several executors for scaling and distributing tasks:

- LocalExecutor: Runs tasks locally on a single machine.

- CeleryExecutor: Distributes tasks across a cluster using Celery.

- KubernetesExecutor: Executes tasks in a Kubernetes cluster, enhancing scalability.

Use Cases

- ETL Pipelines: Automating data extraction, transformation, and loading.

- Data Engineering Workflows: Managing complex workflows with task dependencies.

- Machine Learning Pipelines: Automating data preparation, model training, and deployment.

- Recurring Tasks: Scheduling jobs for regular tasks like report generation and backups.

Swagger and OpenAPI

What is Swagger?

Swagger is an open-source framework designed for creating, documenting, and consuming RESTful web services. It provides a standard way to define APIs with tools for designing and documenting APIs interactively.

What is OpenAPI?

The OpenAPI Specification (OAS) is an industry standard for defining REST APIs. It began as the Swagger Specification and is now maintained independently by the OpenAPI Initiative. Swagger's tools (e.g., Swagger UI, Swagger Editor) use OAS as the format for API specifications written in JSON or YAML.

Key Swagger/OpenAPI Annotations in Java

- @Operation: Describes an API operation, including a summary, description, etc.

- @Parameter: Specifies details of an API parameter (e.g., query, path).

- @ApiResponse: Describes possible responses, including status codes. Example:

  @ApiResponse(responseCode = "200", description = "Successful retrieval")

- @Schema: Defines model structures used in request/response payloads.

- @Tag: Groups related APIs for better organization. Example:

  @Tag(name = "User Management", description = "Operations related to user management")

Key Components of an OpenAPI/Swagger File

- Paths: Define API endpoints and their methods.

- Parameters: Inputs to the API like query parameters and headers.

- Responses: Define expected response codes and data formats.

- Schemas: Outline the structure of request and response bodies.

- Security: Specify methods for API security (e.g., OAuth2).

- Tags: Group related operations for organization.

OpenAPI 3.x vs. Swagger 2.0

OpenAPI 3.x added improvements over Swagger 2.0:

- Request Bodies: A dedicated requestBody component, whereas Swagger 2.0 included body data in parameters.

- Enhanced Support for Polymorphism: allOf, oneOf, and anyOf for inheritance and polymorphism (Swagger 2.0 supported only allOf):

  - allOf: The data must be valid against all of the listed schemas (used to combine schemas).

  - oneOf: The data must be valid against exactly one of the listed schemas.

  - anyOf: The data must be valid against at least one of the listed schemas.
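The difference between the three keywords is easiest to see as validation rules. In this sketch, each "schema" is simplified to a Java predicate over a parsed JSON object (a real project would use an OpenAPI or JSON Schema validator), and the `name`, `barkVolume`, and `clawLength` fields are hypothetical:

```java
import java.util.List;
import java.util.Map;
import java.util.function.Predicate;

public class SchemaCombinatorsDemo {
    // Simplification: a "schema" is a predicate over a JSON-like map.
    interface Schema extends Predicate<Map<String, Object>> {}

    static final Schema NAMED = doc -> doc.get("name") instanceof String;
    static final Schema DOG = doc -> doc.containsKey("barkVolume");
    static final Schema CAT = doc -> doc.containsKey("clawLength");

    static boolean allOf(Map<String, Object> doc, List<Schema> schemas) {
        return schemas.stream().allMatch(s -> s.test(doc)); // every schema must validate
    }

    static boolean anyOf(Map<String, Object> doc, List<Schema> schemas) {
        return schemas.stream().anyMatch(s -> s.test(doc)); // at least one must validate
    }

    static boolean oneOf(Map<String, Object> doc, List<Schema> schemas) {
        return schemas.stream().filter(s -> s.test(doc)).count() == 1; // exactly one
    }

    public static void main(String[] args) {
        Map<String, Object> dog = Map.of("name", "Rex", "barkVolume", 7);
        Map<String, Object> hybrid = Map.of("name", "Odd", "barkVolume", 3, "clawLength", 2);
        System.out.println(allOf(dog, List.of(NAMED, DOG)));  // true
        System.out.println(oneOf(dog, List.of(DOG, CAT)));    // true
        System.out.println(oneOf(hybrid, List.of(DOG, CAT))); // false: matches both
        System.out.println(anyOf(hybrid, List.of(DOG, CAT))); // true
    }
}
```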

Keeping OpenAPI Documentation in Sync with Code

- API-First Approach: Define the OpenAPI spec before development.

- Annotations: Generate API specs from code annotations (e.g., with springdoc-openapi or Springfox).

- CI/CD Integration: Automate API validation and generation in your CI/CD pipeline using tools like Swagger Codegen or OpenAPI Generator.

Maven vs. Gradle

Maven

Maven is a build automation and dependency management tool configured through the pom.xml file. Its XML structure is rigid and verbose, which can make complex build configurations harder to manage.

Gradle

Gradle uses Groovy or Kotlin as its Domain-Specific Language (DSL) for configuration (build.gradle, or build.gradle.kts for Kotlin). It is more concise and flexible, allowing complex builds to be defined with less code. It is often faster than Maven because it supports incremental builds, a build cache, and a long-lived daemon process.

Feature Comparison

| Feature | Maven | Gradle |
|---|---|---|
| Configuration | XML (POM) | Groovy/Kotlin (DSL) |
| Approach | Convention over configuration | Convention + flexibility |
| Performance | Slower | Faster |
| Dependency Management | Rigid, centralized | Dynamic, flexible |
| Multi-Project Builds | Less flexible | Highly flexible |
| Build Script | Verbose XML | Concise DSL |
| Use Cases | Enterprise Java, standard builds | Android, modern JVM projects |

Kubernetes

Pod

A Pod is the smallest deployable unit in Kubernetes: a single instance of a running application that hosts one or more containers sharing the same network namespace and storage volumes.

Service

A Kubernetes Service exposes a set of Pods, selected by labels, as a single stable network endpoint:

- ClusterIP (default): Exposes the service on a cluster-internal IP, accessible only within the cluster.

- NodePort: Exposes the service on each node’s IP address at a static port, allowing external access.

- LoadBalancer: Exposes the service externally using a cloud provider’s load balancer.

- Service Discovery: Kubernetes provides a built-in DNS service that maps each Service name to the Service's cluster IP (or, for headless Services, directly to the Pod IP addresses).

Deployment

A Deployment defines a desired state for Pods and ReplicaSets, ensuring that a specified number of Pod replicas are running at any given time.

ReplicaSet

A ReplicaSet is responsible for maintaining a stable set of replica Pods running at any given time.

DaemonSet

A DaemonSet ensures that a copy of a Pod runs on every node (or on selected nodes).

StatefulSet

A StatefulSet is used to manage stateful applications. It is useful for databases and other applications requiring persistent storage, unique network identifiers, and ordered deployment or scaling. Each Pod in a StatefulSet has a unique, persistent identity and storage.

Volume

A Kubernetes Volume provides storage for data that containers in a Pod can access:

- emptyDir: A temporary directory that lives for the lifespan of the Pod.

- hostPath: Maps a directory on the host node to the container.

- persistentVolume (PV): A persistent storage resource provisioned in the cluster.

- persistentVolumeClaim (PVC): A request for storage by a user.

ConfigMap

A ConfigMap is used to store configuration data in key-value pairs.

Secret

Secrets are used to store sensitive information such as passwords, OAuth tokens, or SSH keys.

Namespace

Namespaces provide a way to divide cluster resources between multiple users or teams, useful for creating virtual clusters to organize resources and workloads.

Ingress

Ingress is an API object that manages external access to services within a cluster, typically HTTP/S.

Kubelet

Kubelet is an agent that runs on each node in Kubernetes, ensuring that containers described in PodSpecs are running and healthy. It communicates with the control plane (API server) to report node status, monitor Pods, and execute container workloads.

Kube-Proxy

Kube-proxy is a network proxy that runs on each node and handles network communications both within the cluster and between external clients and services. It manages network rules on each node to enable communication between Pods or expose services outside the cluster.

Controller Manager

The Controller Manager is a daemon responsible for running various controllers that regulate the state of the cluster:

- Node Controller: Monitors node availability.

- Replication Controller: Ensures the correct number of Pods are running.

- Endpoint Controller: Populates the Endpoints object to join Services and Pods.

- ServiceAccount & Token Controllers: Manage default service accounts and API tokens.

Scheduler

The Scheduler is responsible for assigning Pods to nodes based on resource availability, node constraints, and other factors, ensuring efficient and balanced workload distribution across nodes. It considers Pod requirements such as CPU, memory, node labels, and affinity rules.

API Server

The API Server is the central management component in Kubernetes, providing the main entry point to the control plane. It handles REST API requests for creating, updating, and deleting Kubernetes resources.

Etcd

Etcd is a distributed, consistent key-value store used by Kubernetes to store all cluster state data. It ensures that the cluster state is saved and highly available, making it critical for the correct operation of the Kubernetes control plane.

Horizontal Pod Autoscaler (HPA)

HPA automatically scales the number of Pods in a deployment based on observed CPU/memory or other custom metrics, optimizing resource utilization by adjusting the number of replicas to match the workload.
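The core HPA calculation documented by Kubernetes is desiredReplicas = ceil(currentReplicas × currentMetricValue / desiredMetricValue). This sketch implements that rule with the default 0.1 tolerance and min/max bounds; the real controller adds stabilization windows and other checks that are not modeled here, and the sample numbers are arbitrary:

```java
public class HpaFormulaDemo {
    // desiredReplicas = ceil(currentReplicas * currentMetricValue / desiredMetricValue),
    // clamped to [minReplicas, maxReplicas].
    static int desiredReplicas(int current, double currentMetric, double targetMetric,
                               int minReplicas, int maxReplicas) {
        double ratio = currentMetric / targetMetric;
        // Inside the tolerance band (0.1 by default) the HPA leaves the replica count alone.
        if (Math.abs(ratio - 1.0) <= 0.1) {
            return current;
        }
        int desired = (int) Math.ceil(current * ratio);
        return Math.max(minReplicas, Math.min(maxReplicas, desired));
    }

    public static void main(String[] args) {
        System.out.println(desiredReplicas(4, 90, 60, 2, 10));  // 6: CPU at 90% vs 60% target
        System.out.println(desiredReplicas(3, 50, 80, 2, 10));  // 2: scale in
        System.out.println(desiredReplicas(4, 63, 60, 2, 10));  // 4: within tolerance
        System.out.println(desiredReplicas(8, 200, 50, 2, 10)); // 10: capped at maxReplicas
    }
}
```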

Summary

Pods are the smallest deployable units and hold containers. Services provide a stable network identity for accessing Pods. Deployments help manage the desired state of Pods, including rolling updates and scaling. Volumes and PersistentVolumes manage storage for Pods. ConfigMaps and Secrets provide configuration and sensitive data to applications. Kubelet, Kube-proxy, and other control plane components ensure that the desired cluster state is enforced.

Steps for Service-to-Service Communication Across Namespaces with NetworkPolicies

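A NetworkPolicy specifies which Pods are allowed to communicate with other Pods or external endpoints, and can control ingress (incoming) and egress (outgoing) traffic. It operates at the network level, allowing traffic based on IP blocks, pod selectors, or namespace selectors; once a Pod is selected by a policy for a given direction, traffic in that direction that no policy allows is denied. NetworkPolicies are enforced only if the cluster's network plugin supports them.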

Allowing Service-to-Service Communication Across Namespaces

Consider the following scenario:

- Namespace A has a service named frontend.

- Namespace B has a service named backend.

NetworkPolicy for Backend Service

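apiVersion: networking.k8s.io/v1
kind: NetworkPolicy
metadata:
  name: allow-frontend-to-backend
  namespace: namespace-b
spec:
  podSelector:
    matchLabels:
      app: backend
  policyTypes:
  - Ingress
  ingress:
  - from:
    # Same list item: the peer must be in namespace-a AND carry app=frontend.
    # Assumes namespace-a is labeled name=namespace-a; on Kubernetes 1.21+ the
    # automatic label kubernetes.io/metadata.name: namespace-a also works.
    - namespaceSelector:
        matchLabels:
          name: namespace-a
      podSelector:
        matchLabels:
          app: frontend
    ports:
    # This is the backend Pod's container port, not necessarily the Service port.
    - protocol: TCP
      port: 80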
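Indentation matters in the `from` list of this policy: because `namespaceSelector` and `podSelector` sit in the same list item, a peer must match both. Written as two separate items, they are ORed, and a `podSelector`-only item matches Pods in the policy's own namespace. The Java sketch below models only that selector logic with plain label maps (Java 16+ for the record; class and label values are illustrative, not a Kubernetes API):

```java
import java.util.Map;

public class PolicyPeerDemo {
    // A peer Pod as the policy sees it: namespace name, namespace labels, Pod labels.
    record Peer(String namespace, Map<String, String> namespaceLabels, Map<String, String> podLabels) {}

    // matchLabels semantics: every listed key/value must be present on the object.
    static boolean matches(Map<String, String> selector, Map<String, String> labels) {
        return selector.entrySet().stream()
                .allMatch(e -> e.getValue().equals(labels.get(e.getKey())));
    }

    // One "from" item holding both selectors: the peer must satisfy both (AND).
    static boolean combinedItem(Peer p, Map<String, String> nsSel, Map<String, String> podSel) {
        return matches(nsSel, p.namespaceLabels()) && matches(podSel, p.podLabels());
    }

    // Two separate "from" items: either one admits the peer (OR). The podSelector-only
    // item applies to Pods in the policy's own namespace.
    static boolean separateItems(Peer p, String policyNamespace,
                                 Map<String, String> nsSel, Map<String, String> podSel) {
        boolean byNamespace = matches(nsSel, p.namespaceLabels());
        boolean byPod = p.namespace().equals(policyNamespace) && matches(podSel, p.podLabels());
        return byNamespace || byPod;
    }

    public static void main(String[] args) {
        Map<String, String> nsSel = Map.of("name", "namespace-a");
        Map<String, String> podSel = Map.of("app", "frontend");
        Peer frontend = new Peer("namespace-a", Map.of("name", "namespace-a"), Map.of("app", "frontend"));
        Peer batch = new Peer("namespace-a", Map.of("name", "namespace-a"), Map.of("app", "batch"));

        System.out.println(combinedItem(frontend, nsSel, podSel));              // true
        System.out.println(combinedItem(batch, nsSel, podSel));                 // false
        System.out.println(separateItems(batch, "namespace-b", nsSel, podSel)); // true: too permissive
    }
}
```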

NetworkPolicy for Frontend Service

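apiVersion: networking.k8s.io/v1
kind: NetworkPolicy
metadata:
  name: allow-frontend-egress
  namespace: namespace-a
spec:
  podSelector:
    matchLabels:
      app: frontend
  policyTypes:
  - Egress
  egress:
  - to:
    - namespaceSelector:
        matchLabels:
          name: namespace-b
      podSelector:
        matchLabels:
          app: backend
    ports:
    - protocol: TCP
      port: 80
  # Without this rule the frontend cannot resolve backend.namespace-b.svc.cluster.local,
  # because egress to the cluster DNS would also be denied. The k8s-app: kube-dns label
  # is common (CoreDNS deployments usually keep it) but may differ in your cluster.
  - to:
    - namespaceSelector:
        matchLabels:
          kubernetes.io/metadata.name: kube-system
      podSelector:
        matchLabels:
          k8s-app: kube-dns
    ports:
    - protocol: UDP
      port: 53
    - protocol: TCP
      port: 53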

To access the backend service from the frontend service, use the following format:

<service-name>.<namespace-name>.svc.cluster.local

Example:

http://backend.namespace-b.svc.cluster.local:80

RDBMS vs NoSQL

Data Model

RDBMS:

- Uses a structured, tabular model where data is organized into rows and columns (tables).

- Relationships between tables are defined using foreign keys.

- Follows ACID (Atomicity, Consistency, Isolation, Durability) principles to maintain data integrity.

NoSQL:

- Supports a more flexible, schema-less data model, for example:

  - Document-based (e.g., MongoDB).

  - Key-value pairs (e.g., Redis).

  - Column-family (e.g., Cassandra).
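To illustrate the data-model difference, this sketch stores the same hypothetical order twice: relationally, as two record types linked by a foreign key that must be joined, and as a single document with the items embedded, the way a document database such as MongoDB would typically hold it. Table, field, and SKU names are illustrative, and records require Java 16 or later:

```java
import java.util.List;
import java.util.Map;

public class DataModelDemo {
    // Relational style: two "tables" linked by a foreign key (OrderItem.orderId -> Order.id).
    record Order(int id, String customer) {}
    record OrderItem(int orderId, String sku, int quantity) {}

    // Roughly: SELECT SUM(quantity) FROM order_items WHERE order_id = ?
    static int totalQuantityRelational(Order order, List<OrderItem> items) {
        return items.stream()
                .filter(item -> item.orderId() == order.id()) // join on the foreign key
                .mapToInt(OrderItem::quantity)
                .sum();
    }

    // Document style: items are embedded in the order document, so no join is needed.
    static int totalQuantityDocument(Map<String, Object> orderDoc) {
        @SuppressWarnings("unchecked")
        List<Map<String, Object>> items = (List<Map<String, Object>>) orderDoc.get("items");
        return items.stream().mapToInt(item -> (Integer) item.get("quantity")).sum();
    }

    public static void main(String[] args) {
        Order order = new Order(1, "ana");
        List<OrderItem> items = List.of(
                new OrderItem(1, "BOOK-1", 2),
                new OrderItem(1, "PEN-7", 3),
                new OrderItem(2, "MUG-3", 1)); // belongs to a different order
        Map<String, Object> orderDoc = Map.of(
                "_id", 1,
                "customer", "ana",
                "items", List.of(
                        Map.of("sku", "BOOK-1", "quantity", 2),
                        Map.of("sku", "PEN-7", "quantity", 3)));

        System.out.println(totalQuantityRelational(order, items)); // 5
        System.out.println(totalQuantityDocument(orderDoc));       // 5
    }
}
```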

Scalability

RDBMS:

- Traditionally, RDBMSs scale vertically by adding more power (CPU, RAM) to a single server.

- Horizontal scaling (across multiple servers) can be challenging due to the complex nature of relationships between tables.

NoSQL:

- Built to scale horizontally.

Query Language

RDBMS:

- Uses SQL (Structured Query Language), which is standardized for querying and managing relational databases.

- SQL supports complex joins, aggregations, and multi-table operations.

NoSQL:

- MongoDB uses a document-based query language similar to JSON.

- Cassandra uses CQL (Cassandra Query Language).

Continuous Integration (CI)

Continuous Integration (CI) is the practice of automating the integration of code changes from multiple developers into a single codebase several times a day.

Benefits of CI

- Early Detection of Issues

- Fast Feedback Loop

- Consistent Code Quality

On every change, the CI server builds the application and runs automated unit tests and static code analysis.

Continuous Deployment (CD) or Continuous Delivery

CD extends CI so that code is always ready to be deployed to production, with a releasable artifact created for every change. Continuous Delivery and Continuous Deployment differ only in the final step.

Process

- After CI completes (builds/tests pass), the application is packaged as a deployable artifact (e.g., Docker image, .war file).

- Continuous Delivery: Wait for manual approval before production deployment.

- Continuous Deployment: Automatically deploy to production.

MIGRATING ENTERPRISE DATABASES TO THE CLOUD

CLIENT SERVER

Client-server databases are harder to move because more of the logic lives in stored procedures, which need to be verified and tested.

THREE-TIER / N-TIER

Three-tier or n-tier applications have most of the business logic in the application code.

So less testing is required in the database. There also tend to be fewer direct dependents, because clients connect to the application server, which in turn connects to the database.

SERVICE ORIENTED

Service-oriented applications can be the easiest to move to the cloud, because the database and all its details are hidden behind the service layer.

The service can be used to synchronize the source and target databases during the migration.

IMPLEMENTATION METHODOLOGY

ASSESSMENT PHASE

The assessment phase is where you determine the requirements and dependencies to migrate your apps to Google Cloud.

The assessment phase is crucial for the success of your migration.

You need to gain deep knowledge about the apps you want to migrate, their requirements, their dependencies, and your current environment.

ASSESSMENT PHASE: SIX STEPS

First, building a comprehensive inventory of your apps.

Second, cataloging your apps according to their properties and dependencies.

Third, training and educating your teams on Google Cloud.

Fourth, building an experiment and proof of concept (POC) on Google Cloud.

Fifth, calculating the total cost of ownership (TCO) of the target environment.

Sixth, choosing the workloads that you want to migrate first.

PLAN PHASE

A comprehensive list of the use cases that your app supports, including uncommon ones and corner cases.

All the requirements for each use case, such as performance and scalability requirements.

Expected consistency guarantees, failover mechanisms, and network requirements.

A potential list of technologies and products that you want to investigate and test.

A POC will help you calculate the total cost of ownership of a cloud solution.

When you have a clear view of the resources you need in the new environment, you can build a total cost of ownership model that lets you compare your cost on Google Cloud with the cost of your current environment.

DEPLOY PHASE

A key aspect of making your deployments successful is automation.

If you're creating your resources manually, your projects will take much longer, maintenance will be harder, change will be more difficult, and your projects will be more prone to human error.

OPTIMIZATION PHASE

Optimization is an ongoing process of continuous improvement.

You optimize your environment as it evolves. To avoid uncontrolled and duplicative efforts, set measurable optimization goals and stop when you meet them.

GOOGLE IMPLEMENTATION METHODOLOGY

The four approaches are scheduled maintenance, continuous replication, split reading and writing, and a data access microservice.

SCHEDULED MAINTENANCE

Use scheduled maintenance if you can tolerate some downtime.

Define a time window when the database and applications will be unavailable.

Migrate the data to the new database, then migrate client connections.

Lastly, turn everything back on.

CONTINUOUS REPLICATION

Continuous replication uses your database's built-in replication tools to synchronize the old database to the new database.

This is relatively simple to set up and can be done by your database administrator.

There are also third-party tools, like Striim, that automate this process.

Eventually, you will move the client connections from the old database to the new one.

Then you can turn off the replication and retire the old site.

SPLIT READING AND WRITING

With split reading and writing, the clients read and write to both the old and new databases for some amount of time.

Eventually, you can retire the old database.

Obviously, this requires code changes on the client.

You would only do this when you are migrating to different types of databases, for example, if you're migrating from Oracle to Spanner.

If you're migrating from Oracle on-premises to Oracle on Google Cloud Bare Metal Solution, continuous replication would make more sense.

DATA ACCESS MICROSERVICE

All data access is encapsulated or hidden behind the service.

First, migrate all client connections to the service.

The service then handles migration from the old to the new database.

Essentially, this makes split reading and writing seamless to the clients.
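A minimal sketch of the data access service pattern, assuming a hypothetical `Store` interface in place of real database clients: clients call only `UserDataService`, which writes to both databases and switches reads to the new one when you decide to cut over. Existing rows would still need to be copied to the new database (for example, by replication) before the cutover, which this sketch does not model:

```java
import java.util.HashMap;
import java.util.Map;
import java.util.Optional;

public class DataAccessServiceDemo {
    // Hypothetical storage abstraction standing in for the old and new databases.
    interface Store {
        void put(String key, String value);
        Optional<String> get(String key);
    }

    static final class InMemoryStore implements Store {
        private final Map<String, String> data = new HashMap<>();
        public void put(String key, String value) { data.put(key, value); }
        public Optional<String> get(String key) { return Optional.ofNullable(data.get(key)); }
    }

    // Clients talk only to this service, so split reads and writes stay invisible to them.
    static final class UserDataService {
        private final Store oldDb;
        private final Store newDb;
        private volatile boolean readFromNew = false;

        UserDataService(Store oldDb, Store newDb) {
            this.oldDb = oldDb;
            this.newDb = newDb;
        }

        // Split writing: every write goes to both databases during the migration.
        void save(String key, String value) {
            oldDb.put(key, value);
            newDb.put(key, value);
        }

        // Reads stay on the old database until the new one is verified.
        Optional<String> find(String key) {
            return readFromNew ? newDb.get(key) : oldDb.get(key);
        }

        void cutOverReads() { readFromNew = true; }
    }

    public static void main(String[] args) {
        Store oldDb = new InMemoryStore();
        Store newDb = new InMemoryStore();
        UserDataService service = new UserDataService(oldDb, newDb);
        service.save("user:1", "ana");
        System.out.println(service.find("user:1")); // Optional[ana], served by the old database
        service.cutOverReads();
        System.out.println(service.find("user:1")); // Optional[ana], served by the new database
    }
}
```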

MIGRATION STRATEGIES

LIFT AND SHIFT


Lift and shift means moving an application or database as is into your new cloud environment. This is often an easy and effective way of migrating databases and other applications.

Monolithic applications like a WordPress site, for example, might be good candidates for a lift and shift approach.

In principle, lift and shift is simple:

Create an image of the virtual machine in the current environment. Then export the image from the current environment and copy it to a Google Cloud Storage bucket.

Create a Compute Engine image from the exported image. Once you have a Compute Engine image, use it to create your virtual machine.

Machines are moved very quickly, and then their data is streamed into Google Cloud before they go live.

VM migration

Identify a set of VMs to migrate first, prepare the VMs, migrate them, and then test to verify that they migrated correctly.

MigVisor

An assessment tool that helps identify dependencies and recommends target services and databases based on the analysis.

Striim

An online database migration tool.

BACKUP AND RESTORE

Perform a SQL backup on the source database, then copy the backup files into Google Cloud.

Then run a restore on the new target database server.

LIVE DATABASE MIGRATION

Using database replication, you can switch databases with little or no downtime:

First, configure the existing database as main.

Second, create the new database and configure it as the replica.

Third, the main synchronizes the data with the replica.

Fourth, migrate the clients to the replica and promote it to the main.

------------------------------------

Migrate large numbers of clients with no downtime by using a data access service.

First, create a service that encapsulates all data access.

Second, migrate clients to use the service, rather than connecting to the database.

Third, once all clients are updated, the service is the only direct database client.

Fourth, replicate the database and then migrate the service connection.

Using blue/green deployments to migrate data access services from on-premises to the cloud reduces the risk of a migration, because you can quickly revert to the older service.
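The quick revert is possible because clients reach the service through a single switchable route while both versions keep running. This sketch uses a hypothetical in-process router as a stand-in for a load balancer or DNS switch, flipping one reference between the blue and green services:

```java
import java.util.concurrent.atomic.AtomicReference;
import java.util.function.Function;

public class BlueGreenRouterDemo {
    // Hypothetical router: every client call goes through one switchable reference.
    static final class Router {
        private final Function<String, String> blue;  // e.g. on-premises data access service
        private final Function<String, String> green; // e.g. cloud data access service
        private final AtomicReference<Function<String, String>> live;

        Router(Function<String, String> blue, Function<String, String> green) {
            this.blue = blue;
            this.green = green;
            this.live = new AtomicReference<>(blue); // start on the existing service
        }

        String handle(String request) {
            return live.get().apply(request);
        }

        void switchToGreen() { live.set(green); }

        void revertToBlue() { live.set(blue); } // quick rollback if green misbehaves
    }

    public static void main(String[] args) {
        Router router = new Router(req -> "blue:" + req, req -> "green:" + req);
        System.out.println(router.handle("user/1")); // blue:user/1
        router.switchToGreen();
        System.out.println(router.handle("user/1")); // green:user/1
        router.revertToBlue();
        System.out.println(router.handle("user/1")); // blue:user/1
    }
}
```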
